What is Zero Trust & why is it so important? (Part 1)

Simon Morse

Technical Director Security, Versent

Security is having a moment in the spotlight, thanks to the recent surge in decentralised work practices. People working from home routinely share sensitive business data across an ever-changing network of devices and platforms, so cybersecurity must encompass this new reality.

Zero Trust principles are a foundational plank of contemporary data security. In this series of blog posts, we’re going to talk about what Zero Trust is and what it can teach us about good and bad digital security architecture. We’ll talk through some of the foundational concepts behind Zero Trust, and we’ll also look at how to implement it while making efficient use of budgets and teams.

In this first article, we’ll start by defining Zero Trust. In article two, we’ll bust a few Zero Trust myths, and then in the third article, we’ll describe some real-world applications that solve current problems.

What’s the origin of Zero Trust?

What’s driven the current movement toward Zero Trust Architecture (ZTA), and how has it come to be viewed as a better solution?

Many forces drove organisations to think differently about security, and these had a common theme: de-perimeterisation. De-perimeterisation is a term introduced by the Jericho Forum in the early 2000s. The Jericho Forum published a series of whitepapers that foresaw the trend toward our current ubiquitous online economy, with public cloud, SaaS, mobile apps, always-on connectivity, widespread remote working and IoT.

In their whitepapers, the Jericho Forum authors described irresistible economic forces deconstructing enterprise perimeters and blurring the borders between organisations. The authors argued that new ways to collaborate, integrate specialised services and generally improve productivity would be needed. They foresaw that network-oriented security would increasingly get in the way of business and government services across the globe, and they proposed that trust should be a core function of future security.

But let’s go back for a minute to the early days of the internet and see how online security became a real-world necessity.

Commercial drivers of cybersecurity evolution

One of the early milestones in the popularisation of the internet was the ability to move real money online. The online economy created problems for IT in general and for security in particular: as organisations started opening up to financial transactions, a host of new threats followed.

Financial institutions started delivering banking services online, and scammers quickly realised that there was a buck to be made. Phishing scams, browser-based attacks, identity fraud, phone porting, fake websites, credential stuffing, money mules, romance scams, Nigerian princes and full-blown fake call centres popped up at an alarming rate.

Savvy cybercriminals started applying business principles to their criminal operations so that the separate functions of the criminal ecosystem were kept at arm’s length from each other. The call centres delivering their spiels, the hackers developing the malware and the mules being hired via “work from home” adverts on telephone poles were all contained in separate bubbles. Scammers even ran HR screenings on candidate accomplices, testing whether they were psychologically comfortable not questioning the details of their employment. Many people participate in international criminal enterprises without realising what they’re doing. Those who do cotton on usually don’t understand the impact or scale of the criminal operation; they’re happy to live with plausible deniability or convince themselves that insurers will cover the losses.

Through the mid-2000s, insider fraud became a major cybercrime category. Harvesting apparently innocent data like contact lists was easy for corrupt or misguided employees inside corporations. Small pieces of data, like an email password, can be enough to instigate a targeted attack or undertake an account takeover. This sort of attack became so lucrative that name-and-address and email-and-password combinations became hot commodities on the dark web. Organisations that weren’t exposed to direct monetary fraud, such as Facebook and Sony, also became targets for hackers who wanted to harvest their caches of customer data.

As levels of cybercrime grew rapidly through the 2000s, governments began to incrementally legislate obligations for corporations in an effort to claw back their citizens’ privacy. These legislative initiatives were well-intentioned, but without solid cybersecurity measures to support them, they had minimal impact on crime.

Network perimeter security

Network perimeters became a foundational security control more or less by default, but they were never designed for this purpose. In the early days of the IT revolution, organisations’ systems were typically fully air-gapped, contained within network boundaries that rarely extended beyond a single building. (It’s interesting to look back and remind ourselves that IT existed long before the internet and even before standards-based Open Systems.)

Network perimeters are blunt controls; ineffective against insider threats, but 100% effective at keeping outsiders out. We tend to forget these days just how expensive and bandwidth-constrained external network connectivity was in the internet’s early days. The idea of watching video of any sort delivered over the network (let alone cat videos for entertainment purposes) was inconceivable. Real-time streaming video conferencing was the stuff of science fiction. But hackers were also constrained in the same way, so cybercriminals used techniques like ‘war dialling’ modem banks and ‘phone phreaking’ alongside HTTP attacks over the internet.
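To make the “blunt control” point concrete, here is a minimal sketch of a perimeter-style access decision, assuming a hypothetical internal address range and a hypothetical `Request` shape. The only question the perimeter asks is where a request comes from; who is asking and what they are doing never enter the decision.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical corporate address range standing in for "inside the building".
INTERNAL_NETWORK = ipaddress.ip_network("10.0.0.0/8")


@dataclass(frozen=True)
class Request:
    user: str
    source_ip: str
    action: str


def perimeter_allows(request: Request) -> bool:
    """Perimeter model: allow anything that originates inside the network boundary."""
    return ipaddress.ip_address(request.source_ip) in INTERNAL_NETWORK


# An outsider on the internet is stopped at the boundary...
outsider = Request(user="unknown", source_ip="203.0.113.7", action="read_contacts")
# ...but an insider bulk-exporting the contact list is waved through,
# because the user and the action are never examined.
insider = Request(user="alice", source_ip="10.20.30.40", action="export_all_contacts")

print(perimeter_allows(outsider))  # False
print(perimeter_allows(insider))   # True
```

The user and action fields are deliberately ignored here; that omission is exactly what makes the perimeter effective against outsiders yet blind to the insider threats described above.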

Seeking a better approach to cybersecurity

In the early days of online systems, security was treated as a separate, discrete function. Starting in the mid-2000s, most companies hired specialist cybersecurity teams to solve their security problems. This ushered in a new generation of security specialists but also had an unfortunate side effect: because security was considered a specialist function, most IT people didn’t think about it much. (I’ve talked about this phenomenon in one of my prior blog posts, so have a read of “DevSecOps – the what, why and how” if you’re interested in this stage of cybersecurity evolution.)

By the mid-to-late 2000s, the shortcomings of perimeter-based security were becoming apparent. Online activity requires smooth integration of connected systems, and the movement toward de-perimeterisation was motivated by a need for more comprehensive, flexible security. Using network security as the primary defence against cyber-attacks was increasingly seen as mediocre architecture, but a viable alternative was yet to be found.

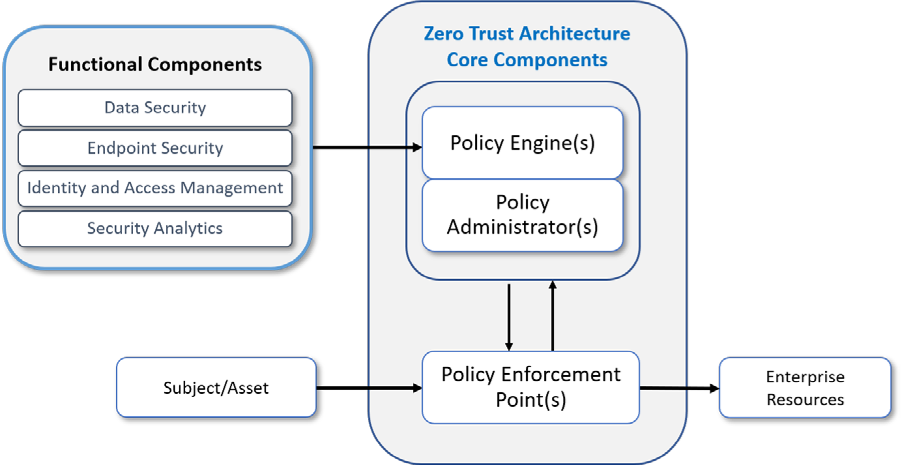

In 2010, research and advisory firm Forrester began exploring the cybersecurity landscape as it related to business structures. Forrester’s work popularised terms like Zero Trust Network Architecture (ZTNA), Microcore and Perimeter (MCAP), Data Acquisition Network (DAN) and Segmentation Gateway (SG). These concepts described ways of rebuilding computer networks with security as a foundational attribute. Importantly, this was still primarily network-oriented thinking, trying to defend against network-oriented problems. In 2019, Forrester developed these concepts into what they termed Zero Trust eXtended (ZTX) architecture, which treats workloads, people, devices and networks as key concepts but puts data at the centre of the frame.

Building on this redefinition of the importance of data in the cybersecurity landscape, organisations such as NIST and the NSA have published guidance that formally defines the concepts involved (for example, NIST Special Publication 800-207, Zero Trust Architecture). At the time of writing, NIST and the NSA are actively seeking industry input on a number of defined use cases.

The exploration of data-focused security we’re discussing here largely pre-dates the COVID-19 pandemic, but the radical re-orientation of security structures instigated by decentralised work has brought it into mainstream consciousness.

How not to do Zero Trust

The COVID-19 crisis has thrown decentralised business structures and progressive cybersecurity into the spotlight. But when an overnight change in public perception collides with twenty-plus years of gradual cybersecurity evolution, some of the concepts tend to get oversimplified in the zeitgeist.

Many people in the cybersecurity industry baulk at the concept of Zero Trust. The concern is that we’re proposing to remove network defences without implementing an adequate alternative means of protection. Security specialists are typically even more sceptical than their non-specialist peers because they assume that systems are dynamic, that things will go wrong, and that we need to define what’s required to recover from failure states.

Let’s concede that throwing away network security isn’t necessarily the best solution. As an alternative, let’s consider mandating a strict set of control objectives that everyone should adhere to. Compliance is a great way to establish a baseline cybersecurity posture for a system; it forces organisations to think in broad security terms and eliminate gaps. But compliance is static, so it generally does a poor job of evaluating “what if” scenarios like recovery capability. Compliance doesn’t provide a reasoned way to think about whether the threat scenarios for a particular system have been considered thoroughly. Compliance is indiscriminate. It promotes a universal, binary view of security that doesn’t accommodate questions about when “OK” needs to be “better” or when a security control is redundant.

In cybersecurity, trust is rarely at true “zero”; rather, it sits on a continuum from zero up to the point at which it’s no longer viable to keep increasing it. Security professionals routinely use Threat Modelling as a fundamental technique to describe how systems interact with each other. Trust must be earned and provable in some pre-agreed manner through the implementation of controls, and the failure of a particular control can also be modelled to understand its criticality. Threat models allow thinking to be presented in a data-centric manner and rigorously describe the trust level required at each point in the system based on the identified threats. They can also be maintained and extended over time, so the cybersecurity implications of a change can be evaluated through reasoned judgement.
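The idea of trust as a continuum, earned through controls, with each control’s failure modelled for criticality, can be sketched in a few lines. This is a toy scoring scheme, not a standard threat-modelling methodology: the control names, the `assurance` weights, the required trust level and the assumption that controls act as independent layers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    name: str
    # Hypothetical weight: fraction of remaining doubt this control removes (0.0 to 1.0).
    assurance: float


def trust_level(controls: list[Control], failed: frozenset[str] = frozenset()) -> float:
    """Trust on a 0..1 continuum, treating working controls as independent layers."""
    residual_doubt = 1.0
    for control in controls:
        if control.name not in failed:
            residual_doubt *= 1.0 - control.assurance
    return 1.0 - residual_doubt


def critical_controls(controls: list[Control], required: float) -> list[str]:
    """Controls whose failure on its own drops trust below the required level."""
    return [
        c.name
        for c in controls
        if trust_level(controls, failed=frozenset({c.name})) < required
    ]


# Hypothetical data flow: a user reading customer records from a SaaS application.
controls = [
    Control("mfa", 0.9),
    Control("device_posture_check", 0.5),
    Control("network_allowlist", 0.2),
]
required = 0.9  # hypothetical trust level this data flow must meet

print(round(trust_level(controls), 3))         # 0.96
print(critical_controls(controls, required))   # ['mfa']
```

With these made-up numbers, trust never reaches 1.0, and only the loss of MFA pushes the flow below its required level, so it is the control flagged as critical. Re-running the model with updated controls or weights after a change is a simple analogue of keeping a threat model current over time.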

From Theory to Practice

In this post, we’ve looked at the conditions that gave rise to Zero Trust as a cybersecurity principle. Now that we have a basic understanding of Zero Trust and some insight into where it came from, we can start talking about how it works in practice.

In my next blog post, we’ll look at the challenge of addressing security problems by investing and innovating in sophisticated network security capabilities. We’ll also deconstruct some Zero Trust myths, and in article three of this series, we’ll examine some examples of Zero Trust principles applied to real-world problems.

Want to learn more about Versent’s cybersecurity services and implementation of Zero Trust benchmarks? Get in touch with one of our expert security consultants.