How to get your data project right when you go cloud native

Ravindra Mijar

Senior Software Engineer, Versent

Ravindra Mijar

Senior Software Engineer, Versent

Understand your data

When doing ETL/Data projects, it is really important that you figure out what your data is. How it is structured, how it gets modified, how frequently it gets modified, what happens to your down-stream dependencies if your data formats change. If you start this way, you are already three steps ahead…

a) What’s the nature of your data?

Transactional data may be quite transient and may not always provide a clear picture of the current state to your application. Relatively static data on the other hand is typically used for reference purposes.

Your ETL’s characteristics and resiliency will depend on this. For e.g. if the ETL fails for whatever reason, how important is it for you to replay it immediately? Or will it be OK to run it at its next scheduled run?

You will have some tables that are quite large and chatty, whereas some tables that don’t see a lot of activity. ETLs for these can be different.

b) Is it PCI / PII?

Will there be any issues if the processing / hosting tools are in a particular geographical region? This is especially important when you are using cloud service providers like AWS. Some features / tools may be present only in certain regions. Do you need to cleanse the data on site before it gets processed?

c) How soon do you ned to move it to the target? Near real-time, or with a delay?

This question can also be framed as – what do you need to do to the data in the target system? Run long running analytical loads, or serve it as a data lake for hundreds of other consumers?

Clients may not be aware of the architectural implications of moving the data near real time and with a delay. It is important to understand this requirement so that the right technical choices are made. Going back to the fundamental question of – “if you need it in realtime, should you be looking for a streaming architecture instead?” may not be a bad idea. A system that moves data in near real-time will need greater resiliency, a highly available architecture and may even make it costly to implement.

Understand your source and its limitations

Data is being moved to either enhance it, run analytics on it, creating a replica of, or just because your source cannot keep up with the new demands on it. In all of the cases, it is important to understand the limitations of your source system.

Answers to the below questions will have implications on when you’re able to run ETLs.

· Will you be running queries on the source system to extract data?

· Will you be looking at transaction logs to recreate the operations? Aka. Change Data Capture (CDC)?

· Will you be copying the data in its entirety every time? Truncate + Load?

Understand your target and its pros and cons

For which ever reason you’re moving data to your target system, there’s no target system that will be devoid of any disadvantages. For e.g. AWS Redshift doesn’t enforce primary keys and doesn’t support indexes. This will mean you have to rethink how you load and use the data in your tables.

On the other hand, AWS Redshift does a vacuum immediately after a delete. Meaning you don’t have to think of housekeeping activities in some cases in your ETL. So, a comparative study of the pros and cons of various target systems is necessary to figure out what is a requirement and what’s a good-to-have.

Understand your ETL pipeline, it’s scheduling, failover

ETL pipelines, like any other processing construct will have failures. Other applications may not need to continue working even if they have a

failure. Not necessarily for ETLs. As an ETL developer you need to think of many things incase of failures:

a) How and where will the ETL maintain state?

Keeping it in the source data base or within its own system will both have impacts on the ETL design.

b) How will it resume a failed operation?

This is related to how you think of reconciliation between the source and target systems. For a truncate + load ETL, you don’t need to see what

state the target is in. If you re-run it from the last executed time, your target system will not go out of sync.

For a CDC ETL however, you need to think about what it means to re-run the ETL.

c) Data Lineage, Data Provenance, Monitoring and Scheduling

Your ETL tool will need to cater for these requirements as well. Offloading scheduling to another system / tool may seem easy at first glance. But, your ETL Jobs will become more complex because they will have to maintain the state of the system (source and / or target) when they start and end.

Automated alerts, continuous monitoring is crucial and will help build confidence in your solution.

Understand how all these fit together in AWS

One of the fundamental advantages of the AWS ecosystem is how easy it is to make things work together. This needs to be exploited to ensure your architecture is as robust as possible.

Some ETL tools may not be tuned to run in the cloud. For e.g. Simply having an EC2 instance in AWS to host your ETL tool is not sufficient. The tool should have the ability to use EC2 features like ‘using the instance IAM role’.

Explore and acknowledge the advantages limitations of the tools at your disposal

Any sort of cloud development needs a lot of tools an processes in place. Developers get used to these, and in many cases the alternatives for those processes and tools may become impractical. For e.g. ETL development tools that are visual in nature do not work well with git and pull requests reviews that are textual in nature.

Remember – the human aspects of data!

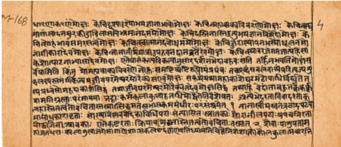

Data projects are like exploring ancient knowledge bases. They are best navigated with human help.

Having SME buy-in and ongoing support are crucial to ensure you get things right. It is very important that the SME is made available to the ETL developers whenever needed.