Producing higher quality software and getting it to production quicker by treating acceptance criteria as code

James Elsey

Microservices Engineer

We share a common goal, but often see it from different perspectives.

Have you ever seen a feature go through inception and development, only to reach later environments (or even production) and turn out not to be quite what we were looking for, despite passing every previous quality gate? We may even have elaborated the feature as a team and all agreed on the outcomes, but chances are there was a disconnect between how engineering and the business understood the requirements. Then comes the time to review our work with the stakeholders, and this is where the challenge begins. Typically, two things happen at this point:

- The engineer demos their feature, which may include walking through the tests to raise confidence that the feature works and is covered by the test automation.

- The product owner checks the demo covers the acceptance criteria.

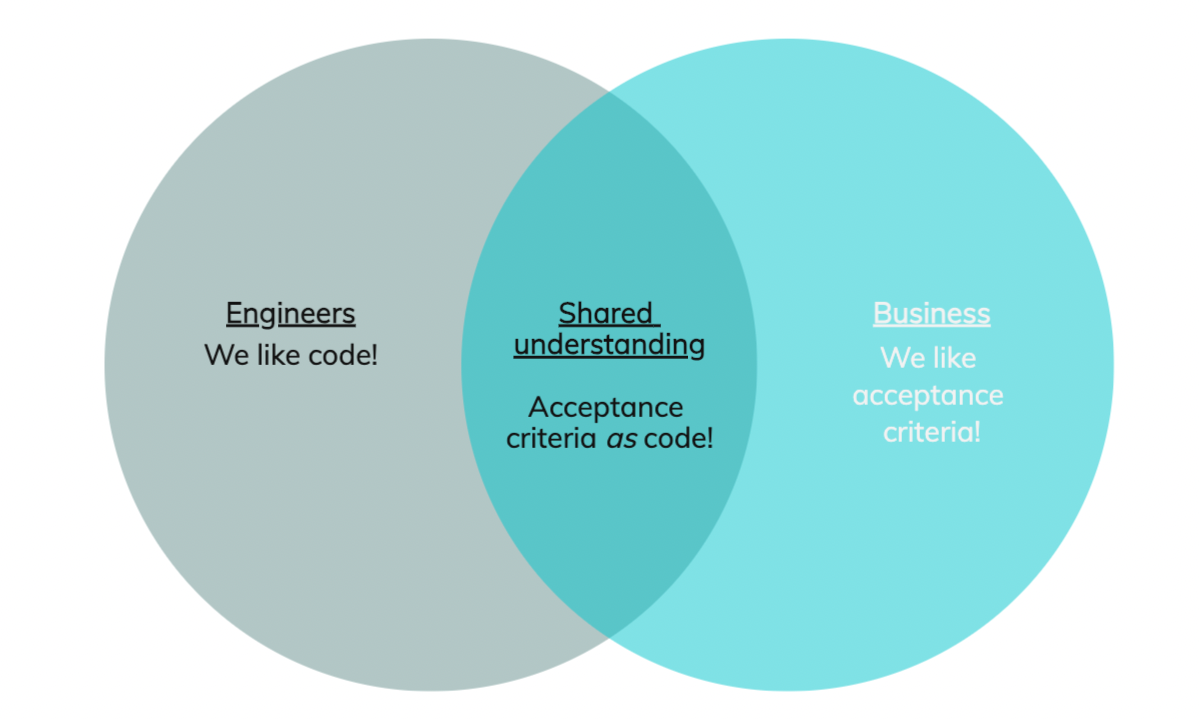

Is this really a good use of time? Why are we discussing requirements after work on a feature has started? Ultimately, it’s a communication issue, and we were able to solve it when we started to treat acceptance criteria as code: living documentation of how the system should behave, which all parties can access and understand. Gauge helped us achieve this.

The challenges of testing complex systems

Often, in the early stages of a project, when there’s a lot of rapid change, team dynamics are still forming and the choice of tooling is up for debate, it is easier for engineers to go with what they’re familiar with, be it Mocha, Jest, or one of the dozens of other test frameworks. This may work perfectly well while the product is light on features, and it may be easy for the engineers to show a stakeholder, in their test framework of choice, that X does Y when Z occurs.

Fast forward several years: the system has grown significantly in size and complexity, and several authors have had their hands in the tests. You now have a very complex test suite in its own right, with differing writing styles and “hacks” added for things that were too hard at the time or needed a tricky workaround to get working. This brings us back to the challenges of the review, as we now have:

- Tests that are hard to understand, especially for those who aren’t familiar with that service.

- Tests that are hard to demonstrate because they are very technical in nature.

- Tests that are hard to maintain, with lots of duplicated or low-level code intertwined with the logic.

- Tests that can’t be understood by the stakeholders without an engineer explaining what is happening.

- Tests that are rigid enough to make refactoring, or even rewriting, difficult.

That got us thinking: there had to be a better way, one that made tests easier to write and easier to read for all parties involved, so we all knew what the products were doing. The Versent code of “Be Better” started to kick in.

What about BDD? Behaviour-Driven Development is a software development approach that focuses on clear communication, using simple language to describe how software should behave. Teams collaborate to turn these descriptions into actual test code, which ensures that the software meets its acceptance criteria while also promoting teamwork and a shared understanding.

Gauge to the rescue

Gauge is an open-source BDD testing framework created by ThoughtWorks. It uses markdown to capture the test scenarios, which are backed by test steps that engineers can write in a variety of programming languages. For our customer we used TypeScript, as it fit our existing skill sets. Kudos to Versentonian Sri Venkatesh, who first brought Gauge into one of our products as a proof of concept; it became the catalyst for change across the program, and Gauge is now used across several core business products.

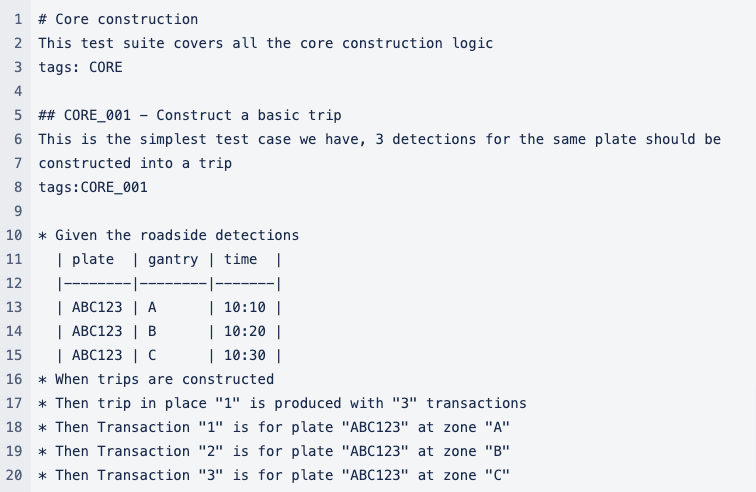

Here is a simplified version of a test in Gauge. We’re in the road tolling domain here, so consider that we’re piecing together vehicle detections at individual roadside gantries into customer trips:

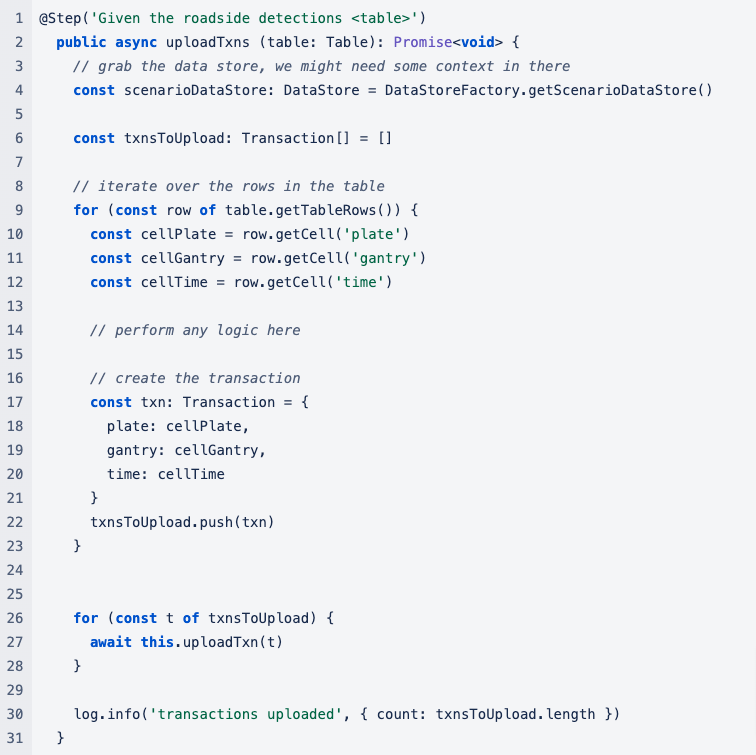
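Gauge specifications are plain markdown: a `#` heading names the specification, each `##` heading starts a scenario, and each `*` bullet is a step, with parameters written in double quotes. As a rough illustration of that shape, the sketch below embeds a hypothetical tolling spec (the step wording is invented for illustration) in a TypeScript string and pulls out its scenarios and steps with a tiny parser. Gauge does this parsing itself; the hypothetical `parseSpec` exists only to make the structure visible.

```typescript
// Hypothetical Gauge-style spec: "#" = specification, "##" = scenario, "*" = step.
const tripSpec = `
# Trip assembly

## Two detections on the same plate form a single trip
* Generate "2" detections for plate "ABC123" through zones "A,B"
* Upload the detections
* Wait "5" seconds for processing
* Assert "1" trip exists for plate "ABC123"
`;

interface ParsedScenario {
  heading: string;
  steps: string[];
}

interface ParsedSpec {
  heading: string;
  scenarios: ParsedScenario[];
}

// Minimal line-based parser. Real Gauge also handles tables, tags and concepts;
// steps written before the first "##" (Gauge "context steps") are ignored here.
function parseSpec(markdown: string): ParsedSpec {
  const spec: ParsedSpec = { heading: "", scenarios: [] };
  for (const rawLine of markdown.split("\n")) {
    const line = rawLine.trim();
    if (line.startsWith("## ")) {
      spec.scenarios.push({ heading: line.slice(3).trim(), steps: [] });
    } else if (line.startsWith("# ")) {
      spec.heading = line.slice(2).trim();
    } else if (line.startsWith("* ")) {
      const current = spec.scenarios[spec.scenarios.length - 1];
      if (current) current.steps.push(line.slice(2).trim());
    }
  }
  return spec;
}

const parsed = parseSpec(tripSpec);
console.log(parsed.heading); // Trip assembly
console.log(parsed.scenarios[0].steps.length); // 4
```

Because the spec is just markdown, a product owner can read and edit it without touching any of the TypeScript behind it.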

Each of these statements is mapped onto a test step, a block of code that the engineers create and maintain. In this example, we have steps that generate data, upload it, wait, and then assert an outcome. An example of a test step looks like this:
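In Gauge’s TypeScript runner, `gauge-ts`, a step is a class method decorated with `@Step("...")`, and `<name>` placeholders in the step text receive the quoted values from the spec. The dependency-free sketch below imitates that matching so the mechanism is visible without the runner. `registerStep`, `runStep`, the in-memory `tripsByPlate` map and the step wording are all hypothetical, not part of Gauge’s API.

```typescript
type StepFn = (...args: string[]) => void;

interface StepDefinition {
  pattern: string;
  matcher: RegExp;
  fn: StepFn;
}

const registry: StepDefinition[] = [];

// Escape regex metacharacters in the literal parts of a step pattern.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Turn "Assert <count> trip exists for plate <plate>" into a regex where each
// <placeholder> captures one double-quoted value from the spec line.
function registerStep(pattern: string, fn: StepFn): void {
  const source = pattern
    .split(/<[^>]+>/)
    .map(escapeRegExp)
    .join('"([^"]*)"');
  registry.push({ pattern, matcher: new RegExp(`^${source}$`), fn });
}

// Find the first definition matching the spec line and call it with the captured values.
function runStep(specLine: string): void {
  for (const def of registry) {
    const match = def.matcher.exec(specLine);
    if (match) {
      def.fn(...match.slice(1));
      return;
    }
  }
  throw new Error(`No step implementation for: ${specLine}`);
}

// Hypothetical step body; in gauge-ts it would live in a method decorated with @Step(...).
const tripsByPlate = new Map<string, number>([["ABC123", 1]]);
registerStep("Assert <count> trip exists for plate <plate>", (count, plate) => {
  const actual = tripsByPlate.get(plate) ?? 0;
  if (actual !== Number(count)) {
    throw new Error(`Expected ${count} trip(s) for ${plate}, found ${actual}`);
  }
});

runStep('Assert "1" trip exists for plate "ABC123"'); // passes silently
```

The step text is the contract: as long as the wording matches, the same implementation serves every scenario that uses it.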

Even in its basic form, this is very powerful: we can build up many variations from these basic building blocks. How does it behave with 20 transactions? What does it do if the plate is blank? What happens if we miss zone B?
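Because steps take parameters, each of those questions becomes a new scenario line rather than new test code. A data-generation step behind such scenarios might delegate to something like the hypothetical `generateDetections` below; the `Detection` shape, zone names and ten-second spacing are assumptions for illustration, not the real tolling data model.

```typescript
interface Detection {
  plate: string;
  zone: string;
  timestamp: number; // epoch milliseconds
}

interface DetectionOptions {
  count: number; // how many detections to produce
  plate: string; // "" simulates an unreadable plate
  zones: string[]; // gantry zones the vehicle passes, in order
  skipZones?: string[]; // zones to drop, e.g. to simulate a missed read
  startTime?: number;
  spacingMs?: number;
}

// Cycle through the remaining zones until `count` detections exist.
function generateDetections(opts: DetectionOptions): Detection[] {
  const skip = new Set(opts.skipZones ?? []);
  const activeZones = opts.zones.filter((z) => !skip.has(z));
  const start = opts.startTime ?? 0;
  const spacing = opts.spacingMs ?? 10000;
  const detections: Detection[] = [];
  if (activeZones.length === 0) return detections;
  for (let i = 0; i < opts.count; i++) {
    detections.push({
      plate: opts.plate,
      zone: activeZones[i % activeZones.length],
      timestamp: start + i * spacing,
    });
  }
  return detections;
}

// The three variations from the text, built from the same building block.
const twentyTransactions = generateDetections({ count: 20, plate: "ABC123", zones: ["A", "B", "C"] });
const blankPlate = generateDetections({ count: 3, plate: "", zones: ["A", "B", "C"] });
const missedZoneB = generateDetections({ count: 2, plate: "ABC123", zones: ["A", "B", "C"], skipZones: ["B"] });
```

Keeping the generator parameterised like this is what allows new edge cases to be expressed by combining existing steps, without waiting on an engineer to write new code.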

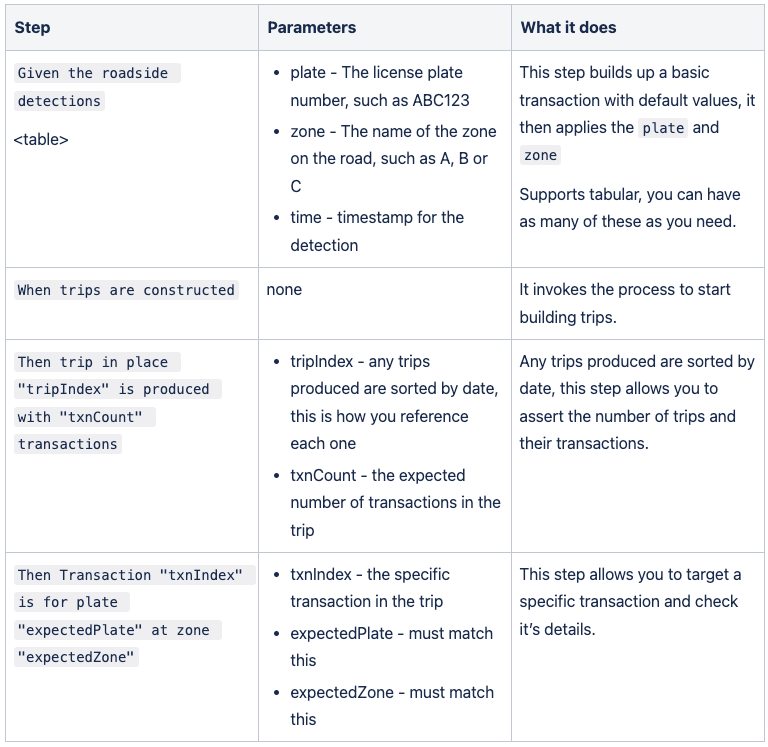

In our case, we have a very complex system that accepts drip-fed data, and when certain conditions are met, a portion of that data is processed under strict business criteria. We crafted more complex steps to help us achieve this:

- Timeouts – sometimes tests need to wait a few seconds for data to be processed.

- Salting – we isolate test cases by prepending salted values to our reference data, making use of test setup and data sharing to do this.

- Environment setup – each of our test cases is isolated and requires some environment setup. This enables each scenario to run independently and in parallel, another fantastic scaling feature of Gauge.

- Data sharing – each test runs with its own “scenario data store”, so data from one step can safely be shared with another step. We use this when injecting data, saving it to the scenario data store so we can use it for assertions.

- Orchestrating APIs – many of our test steps invoke APIs to create data, trigger events, request data, or check outcomes.
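Three of those helpers are easy to sketch without a framework. In `gauge-ts`, the per-scenario store comes from `DataStoreFactory.getScenarioDataStore()`, and Gauge clears it after each scenario. The `ScenarioStore` class, the salt format and the `waitFor` polling helper below are hypothetical stand-ins: salting keeps parallel scenarios from touching each other’s records, the store carries a value from an inject step to an assert step, and `waitFor` is one way (polling up to a timeout, rather than sleeping for a fixed period) to wait for asynchronous processing.

```typescript
// Per-scenario key/value store; a fresh instance per scenario keeps data isolated.
class ScenarioStore {
  private readonly values = new Map<string, unknown>();
  put(key: string, value: unknown): void {
    this.values.set(key, value);
  }
  get<T>(key: string): T {
    if (!this.values.has(key)) throw new Error(`Nothing stored under "${key}"`);
    return this.values.get(key) as T;
  }
}

// A short random prefix so parallel scenarios never share reference data.
function makeSalt(): string {
  return Math.random().toString(36).slice(2, 8).toUpperCase();
}

function salted(salt: string, value: string): string {
  return `${salt}-${value}`;
}

// Poll `check` until it yields a value, or reject once the timeout has elapsed.
async function waitFor<T>(
  check: () => Promise<T | undefined>,
  timeoutMs: number,
  intervalMs = 250,
): Promise<T> {
  const deadline = Date.now() + timeoutMs;
  for (;;) {
    const result = await check();
    if (result !== undefined) return result;
    if (Date.now() >= deadline) throw new Error(`Condition not met within ${timeoutMs} ms`);
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
}

// Usage: an "inject" step stores the salted plate; a later "assert" step reads it back.
const store = new ScenarioStore();
const plate = salted(makeSalt(), "ABC123");
store.put("plate", plate);

// Fake downstream system that "processes" the detection after a short delay.
const processedTrips = new Map<string, number>();
setTimeout(() => processedTrips.set(plate, 1), 100);

const tripCheck = waitFor(async () => processedTrips.get(store.get<string>("plate")), 2000, 25);
```

Polling returns as soon as the data is ready, so a generous timeout costs nothing on a fast run and only lengthens the time it takes a genuine failure to surface.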

When a new requirement came up that the tests couldn’t handle, the engineers would code it and then add it to our test dictionary on Confluence. It looks a bit like this:
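One option, which is an assumption rather than what the team describes, is to keep dictionary entries next to the step code and render them as a table for the wiki, so the published list cannot drift from what the framework supports. The entries and the `renderDictionary` helper below are hypothetical; the output is a markdown table sorted by step text.

```typescript
interface DictionaryEntry {
  step: string; // step text as a product owner would write it, with <placeholders>
  description: string; // what the step does, in business language
}

// Hypothetical entries for illustration only.
const dictionary: DictionaryEntry[] = [
  { step: "Generate <count> detections for plate <plate> through zones <zones>", description: "Creates roadside detections for one vehicle." },
  { step: "Wait <seconds> seconds for processing", description: "Pauses while the system processes the data." },
  { step: "Assert <count> trip exists for plate <plate>", description: "Checks how many trips were built for the plate." },
];

// Escape pipes so step text cannot break the table layout.
function cell(text: string): string {
  return text.replace(/\|/g, "\\|");
}

function renderDictionary(entries: DictionaryEntry[]): string {
  const rows = entries
    .slice()
    .sort((a, b) => a.step.localeCompare(b.step))
    .map((e) => `| ${cell(e.step)} | ${cell(e.description)} |`);
  return ["| Step | Description |", "| --- | --- |", ...rows].join("\n");
}

console.log(renderDictionary(dictionary));
```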

The product owners could then use the dictionary to define very rich acceptance criteria on the Jira card itself. This improved our elaboration and estimation sessions, as the engineers could clearly see what the expectations were. The engineers could copy and paste these tests into the code base and start making them go green.

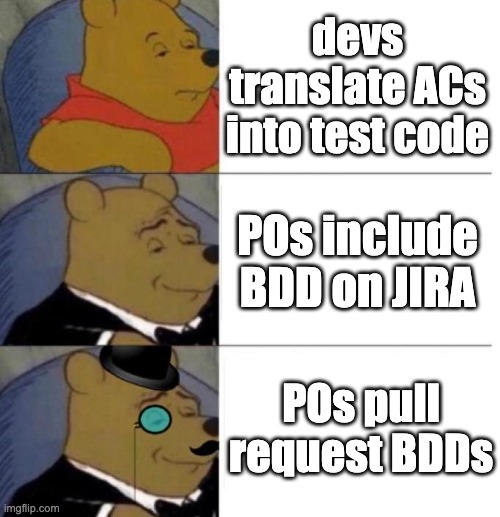

So we’ve got some new tooling, great, but how did that improve our process? We needed to change how we operated as a team, and we did this in stages:

- Stage 0 – Engineers read the ACs on a card and interpret them into test code. This is what we wanted to move away from.

- Stage 1 – POs (initially with support from engineers) define the BDD scenarios on the Jira cards; engineers then copy and paste them into GitHub.

- Stage 2 – POs author pull requests directly on GitHub with their new or updated BDD scenarios; engineers then make them go green as part of their work.

There would be times when the test framework didn’t support a new feature, such as needing a new API to be invoked; the engineers would then implement the new test steps and make them available in the dictionary.

Closing Thoughts

Treating acceptance criteria as code has made a positive impact: we’ve been live in two countries for three years now and have not had a single severity 1 or 2 issue. I would absolutely consider this approach on future engagements. Having acceptance criteria documented in a place that all parties can access and understand is paramount to success. We can’t have requirements scattered across Jira, SharePoint documents, or people’s heads; they must be defined as a contract. Tooling-wise, Gauge made sense in 2019 when we first explored this; however, if I were to do this again, I would consider alternative test frameworks such as Cucumber, which has implementations in more languages, so we could align our application and test code.