How to Add Custom LLM Models to Splunk MLTK

Ilya Reshetnikov

Solutions Architect

A REST API approach to extending your model options beyond the pre-configured list

Splunk MLTK 5.6.0+ allows you to configure LLM inference endpoints (putting the power of LLMs right at your (SPL) fingertips), but the list of available models is somewhat limited. Below, I’ll explain how you can add new LLM models to Splunk MLTK.

The Issue

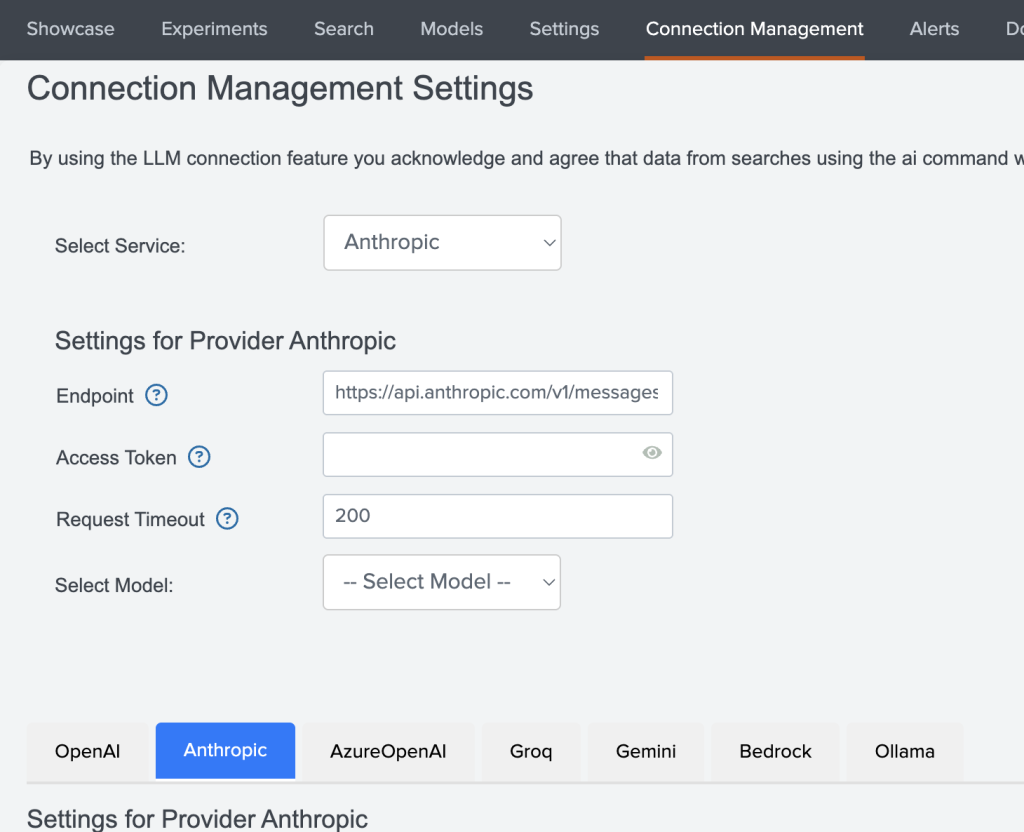

You can configure any of the pre-configured models in the Splunk UI by opening the MLTK App and selecting the “Connection Manager” tab.

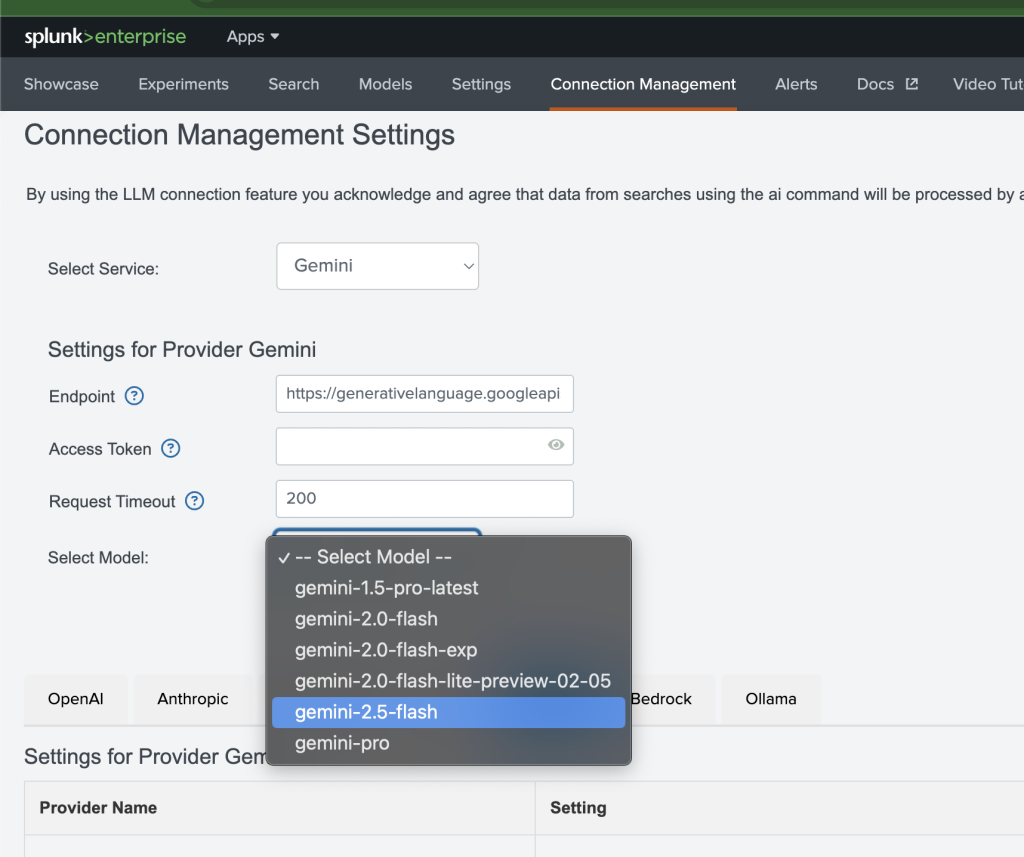

When you select a service, you can see a list of pre-defined models. These are already somewhat outdated; for example, for Gemini, you don’t have any of the 2.5 models.

So, “how do we add new LLM models to Splunk MLTK?” you might ask.

The Solution

Easy-ish …

A bit of background

This configuration is stored in a Splunk KV Store collection named mltk_ai_commander_collection. In essence, it’s one big JSON document that contains all the providers and their models.

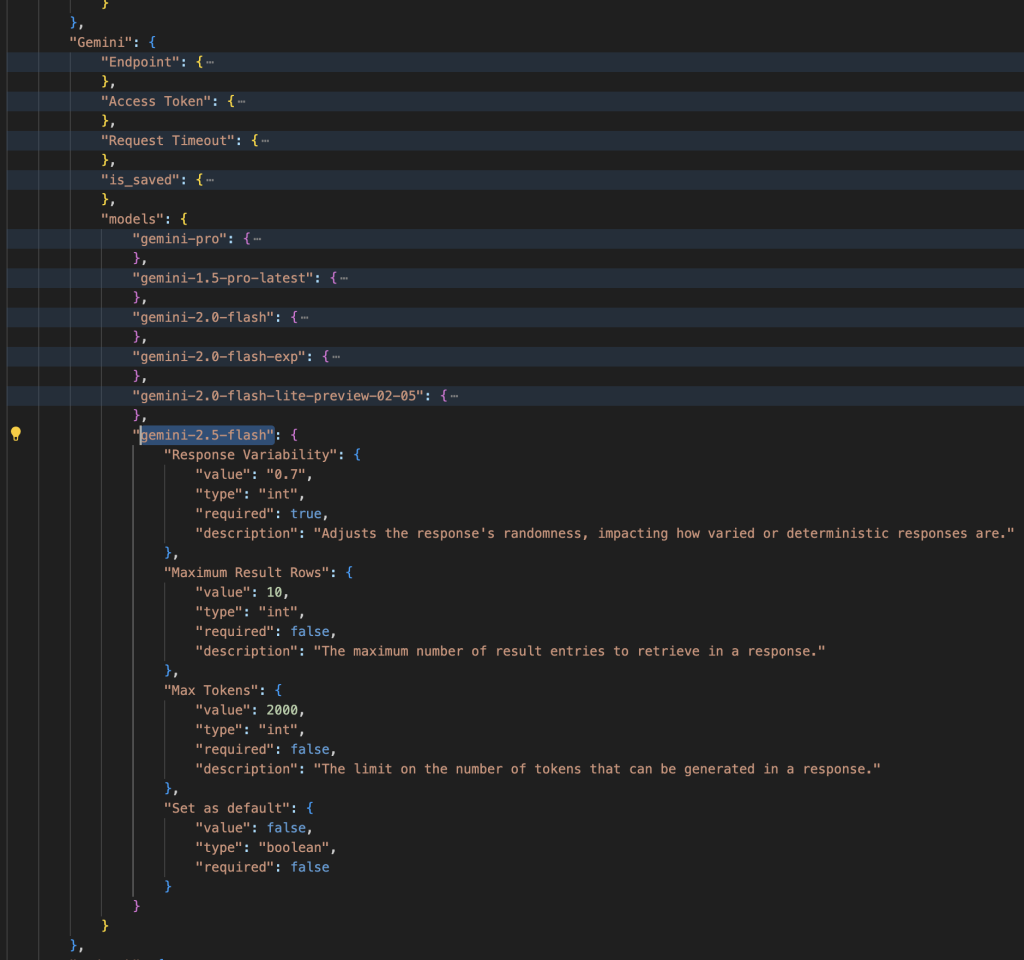

For example, here is the snippet for the Gemini Service and the first of its models:

```json
...
"Gemini": {
  "Endpoint": {
    "value": "https://generativelanguage.googleapis.com/v1beta/models",
    "type": "string",
    "required": false,
    "description": "The API endpoint for sending chat completion requests to Google's Gemini language model."
  },
  "Access Token": {
    "value": "",
    "type": "string",
    "required": true,
    "hidden": true,
    "description": "The authentication token required to access the Gemini API."
  },
  "Request Timeout": {
    "value": 200,
    "type": "int",
    "required": false,
    "description": "The maximum duration (in seconds) before a request to the Gemini API times out."
  },
  "is_saved": {
    "value": true,
    "type": "boolean",
    "required": false,
    "description": "Is Provider details stored"
  },
  "models": {
    "gemini-pro": {
      "Response Variability": {
        "value": 0,
        "type": "int",
        "required": true,
        "description": "Adjusts the response's randomness, impacting how varied or deterministic responses are."
      },
      "Maximum Result Rows": {
        "value": 10,
        "type": "int",
        "required": false,
        "description": "The maximum number of result entries to retrieve in a response."
      },
      "Max Tokens": {
        "value": 2000,
        "type": "int",
        "required": false,
        "description": "The limit on the number of tokens that can be generated in a response."
      },
      "Set as default": {
        "value": false,
        "type": "boolean",
        "required": false
      }
    }
  }
}
...
```

So if we want to add a new model, all we need to do is add another entry to the provider’s models object (which is keyed by model name). Now, a big disclaimer: there might be a better or easier way to do this, this approach might not be officially supported, and some models might not work with it.

The Lookup Editor app can edit KV Store collections, but only those that have a lookup configured for them, and mltk_ai_commander_collection does not have one.

High-level steps

Another way (and the one we will take) is to use the Splunk REST API. At a high level, it consists of the following steps:

- Get the current configuration (and the `_key` of the collection item) in JSON format.
- Update the JSON payload in a text editor.
- Update the KV Store collection with the new JSON.

Detailed steps

I will provide examples using Postman, but you can use curl or any other method of your choice for interacting with the REST API.

1. Get the current configuration

Send a GET request to the collection’s data endpoint (storage/collections/data). The full URL is the following:

https://localhost:8089/servicesNS/nobody/Splunk_ML_Toolkit/storage/collections/data/mltk_ai_commander_collection

Copy the results and take note of the `_key` value at the end of the JSON.
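If you prefer a script to Postman, the GET step can be sketched in Python using only the standard library. This is a minimal sketch, not the method used in this post: it assumes basic authentication with a Splunk user allowed to read the collection, that the collection holds a single record (as the steps here imply), and that providers are objects carrying a "models" object, as in the Gemini snippet. The function names and the `verify_tls` switch are hypothetical.

```python
import base64
import json
import ssl
import urllib.request

# Collection data endpoint from the step above; adjust host and port for your environment.
BASE_URL = (
    "https://localhost:8089/servicesNS/nobody/Splunk_ML_Toolkit"
    "/storage/collections/data/mltk_ai_commander_collection"
)


def fetch_records(username: str, password: str, verify_tls: bool = True) -> list:
    """GET the collection data; the KV Store answers with a JSON array of records."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(BASE_URL, headers={"Authorization": f"Basic {token}"})
    context = ssl.create_default_context()
    if not verify_tls:
        # Port 8089 often serves a self-signed certificate; only skip checks in a lab.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return json.load(response)


def single_record(records: list) -> dict:
    """Assumption: the collection holds exactly one record; fail loudly otherwise."""
    if len(records) != 1:
        raise ValueError(f"expected exactly one record, got {len(records)}")
    return records[0]


def list_models(node, found=None) -> dict:
    """Map every provider (any object with a "models" object) to its model names."""
    found = {} if found is None else found
    if isinstance(node, dict):
        for name, value in node.items():
            if isinstance(value, dict) and isinstance(value.get("models"), dict):
                found[name] = sorted(value["models"])
            list_models(value, found)
    return found


# Usage (hypothetical credentials):
# record = single_record(fetch_records("admin", "changeme", verify_tls=False))
# print(record["_key"])        # the key you will need in step 3
# print(list_models(record))   # providers and their configured model names
```

The provider search is recursive because the snippet elides whatever surrounds the provider objects, so the sketch does not depend on them being top-level fields.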

2. Update the JSON

Paste the JSON into a text editor of your choice. Go to the provider to which you want to add a new model (Gemini, in our case), duplicate one of the existing model objects inside that provider’s models object, and change the model name.

For example, here I copied the gemini-2.0-flash section, pasted it at the end of the Gemini models object, and renamed it to gemini-2.5-flash.
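The same copy-and-rename can be done on the parsed record instead of by hand. Below is a minimal Python sketch, assuming the record from step 1 has already been loaded into a dict (for example with `json.loads`); `find_provider` and `clone_model` are hypothetical helper names, not part of MLTK.

```python
import copy


def find_provider(node, provider: str):
    """Depth-first search for the provider object (e.g. "Gemini") that owns a "models" object."""
    if isinstance(node, dict):
        candidate = node.get(provider)
        if isinstance(candidate, dict) and isinstance(candidate.get("models"), dict):
            return candidate
        for value in node.values():
            found = find_provider(value, provider)
            if found is not None:
                return found
    return None


def clone_model(record: dict, provider: str, source_model: str, new_model: str) -> dict:
    """Return a copy of the record with source_model duplicated under the new_model name."""
    updated = copy.deepcopy(record)  # leave the original payload untouched
    target = find_provider(updated, provider)
    if target is None:
        raise KeyError(f"provider {provider!r} not found")
    models = target["models"]
    if source_model not in models:
        raise KeyError(f"model {source_model!r} not found under {provider!r}")
    if new_model in models:
        raise ValueError(f"model {new_model!r} already exists under {provider!r}")
    # The copy inherits every setting of the source model, including "Set as default".
    models[new_model] = copy.deepcopy(models[source_model])  # appended at the end of the object
    return updated


# Usage, mirroring the example above:
# new_record = clone_model(record, "Gemini", "gemini-2.0-flash", "gemini-2.5-flash")
```

Working on a deep copy keeps the original GET result intact, which is handy if you need to roll back.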

Note: Make sure the new model name matches exactly the model name used when calling the LLM service’s inference API.

For example, for Gemini:

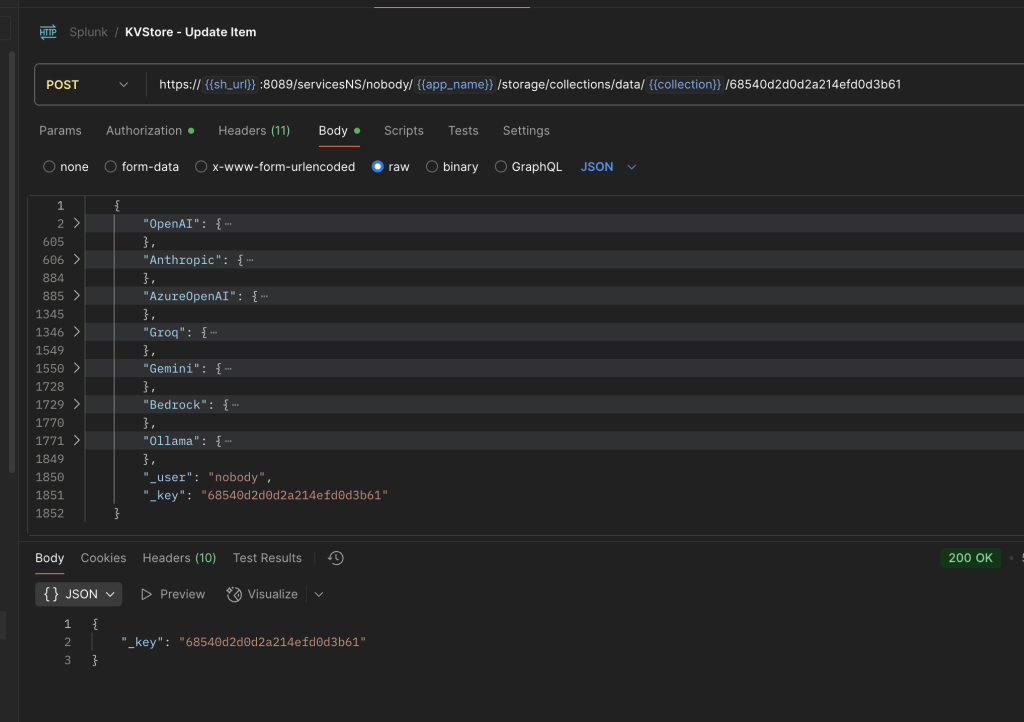
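For Gemini, the exact names can also be checked against the public Gemini API models list, which shares its base URL with the Endpoint value in the snippet. A minimal Python sketch, assuming you have a Gemini API key and that the list response has the shape `{"models": [{"name": "models/<id>", ...}]}`; the `models/` prefix is stripped because the MLTK model keys in the snippet (e.g. `gemini-pro`) carry no such prefix. The helper names and the key variable are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Same base URL as the "Endpoint" value in the Gemini snippet.
MODELS_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def fetch_gemini_models(api_key: str) -> dict:
    """List models visible to this API key (a single page of up to 1000 entries)."""
    query = urllib.parse.urlencode({"pageSize": 1000})
    request = urllib.request.Request(f"{MODELS_URL}?{query}", headers={"x-goog-api-key": api_key})
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def model_ids(response: dict) -> set:
    """Drop the "models/" prefix so names compare with MLTK keys such as "gemini-pro"."""
    return {entry["name"].removeprefix("models/") for entry in response.get("models", [])}


def is_known_model(response: dict, name: str) -> bool:
    """True only if the name you plan to add matches an ID returned by the API exactly."""
    return name in model_ids(response)


# Usage (hypothetical key variable):
# available = fetch_gemini_models(GEMINI_API_KEY)
# print(is_known_model(available, "gemini-2.5-flash"))
```

The comparison is deliberately case-sensitive, since the note above asks for an exact match.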

3. Update the KV collection

Now we need to update the collection with the updated JSON payload.

Send a POST request to the collection’s data endpoint, with two changes:

- append the `_key` value from your JSON to the end of the URL
- remove the square brackets (`[]`) that surround the JSON

The actual URL is something like the following:

https://localhost:8089/servicesNS/nobody/Splunk_ML_Toolkit/storage/collections/data/mltk_ai_commander_collection/68540d2d0d2a214efd0d3b61
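Scripted, this step amounts to serializing the single edited record (which is what removing the brackets achieves) and posting it to that record’s URL. A minimal Python sketch using only the standard library; it assumes basic authentication, a JSON `Content-Type` header, and an edited record already parsed into a dict. `build_update`, `post_update`, and `verify_tls` are hypothetical names.

```python
import base64
import json
import ssl
import urllib.parse
import urllib.request

# Collection data endpoint from step 1; adjust host and port for your environment.
BASE_URL = (
    "https://localhost:8089/servicesNS/nobody/Splunk_ML_Toolkit"
    "/storage/collections/data/mltk_ai_commander_collection"
)


def build_update(record: dict) -> tuple:
    """Return (record URL, JSON body); dumping one record, not the list, drops the [] brackets."""
    url = f"{BASE_URL}/{urllib.parse.quote(record['_key'], safe='')}"
    return url, json.dumps(record).encode("utf-8")


def post_update(url: str, body: bytes, username: str, password: str, verify_tls: bool = True) -> dict:
    """POST the edited record to its key URL so the KV Store replaces the stored record."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # Self-signed certificate on port 8089: only skip verification in a lab.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return json.load(response)


# Usage (hypothetical credentials; edited_record is the dict you edited in step 2):
# url, body = build_update(edited_record)
# print(post_update(url, body, "admin", "changeme", verify_tls=False))
```

Keeping URL construction separate from the network call lets you inspect the exact URL and payload before anything is overwritten.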

Now, refresh the Connection Management page and enjoy a fresh new model at your disposal:

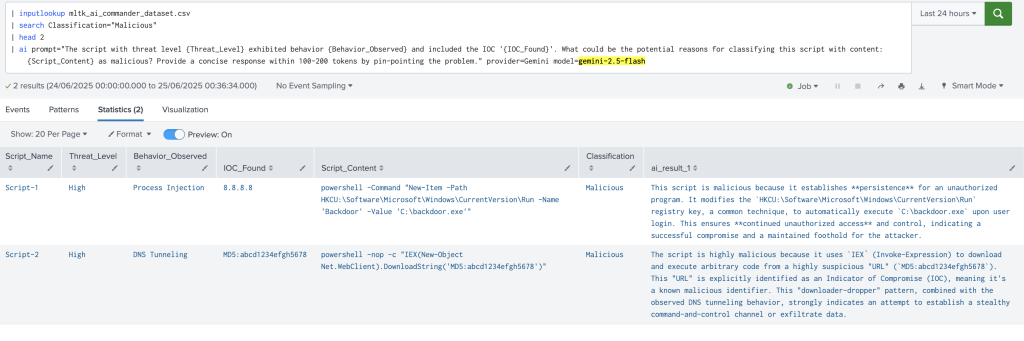

Use the new model in the | ai command:

P.S.

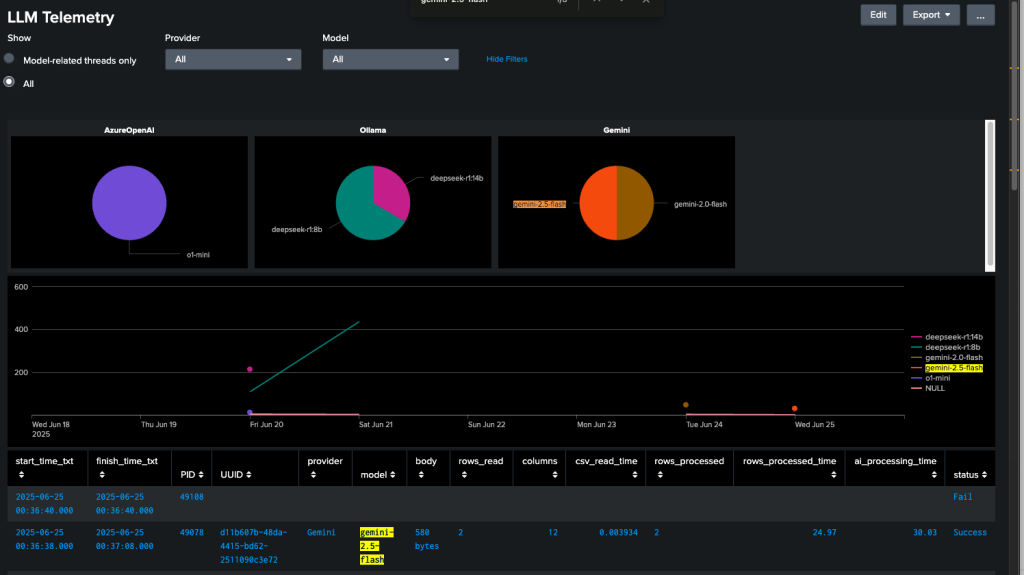

Here is a sneak peek into an LLM Telemetry dashboard I’m working on:

I hope that this blog post helped you to understand how to add new LLM models to Splunk MLTK.

Originally published at https://isbyr.com on June 24, 2025.