AWS Step Functions vs AWS S3 Batch Operations

Richard Keit

Principal Solution Architect

The reasons behind choosing AWS Step Functions or AWS S3 Batch Operations for an AWS S3 Glacier restore process.

AWS S3 Glacier storage tiers are highly durable, redundant storage tiers that provide the most cost-effective solution for long-term data archival and low-cost backup. AWS S3 offers three Glacier storage classes: S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval (formerly S3 Glacier) and S3 Glacier Deep Archive. Glacier Flexible Retrieval is designed for infrequently accessed data with retrieval times of minutes to hours; Expedited, Standard and Bulk are retrieval options for restoring archived data rather than separate storage tiers. These tiers let users choose the right balance of durability, availability, retrieval time and cost for their data archiving requirements.

In this blog, we’ll explore how the AWS S3 Glacier retrieval process works and present two AWS services that can be used in this process: AWS S3 Batch Operations and AWS Step Functions. We’ll also compare their advantages and the use cases each service is best suited for.

AWS S3 Batch Operations

AWS S3 Batch Operations is a managed solution for performing operations on S3 objects at petabyte scale with a single request, specifying:

1. An inventory report (the list of objects). It might take up to 48 hours to deliver the first report.

2. The action to perform, with relevant parameters, for example:

   - Copy objects

   - Delete/replace object tags

   - Restore objects, etc.
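As a rough illustration of the two inputs listed above, the sketch below assembles the request an S3 Batch Operations restore job might use. It assumes the S3 Control `CreateJob` field names as exposed by boto3 and an inventory report's `manifest.json` as the manifest; the helper name, account ID, role, bucket ARNs, report prefix and priority are hypothetical placeholders, not values from our implementation.

```python
import uuid


def build_restore_job_request(
    account_id: str,
    role_arn: str,
    manifest_arn: str,
    manifest_etag: str,
    report_bucket_arn: str,
    expiration_days: int = 7,
    tier: str = "BULK",
) -> dict:
    """Hypothetical helper: keyword arguments for an S3 Batch Operations restore job."""
    # Batch Operations restores accept only the BULK and STANDARD retrieval tiers.
    if tier not in ("BULK", "STANDARD"):
        raise ValueError("S3 Batch Operations restores support BULK or STANDARD tiers")
    return {
        "AccountId": account_id,
        "ConfirmationRequired": False,
        # Input 2: the action to perform, with its parameters.
        "Operation": {
            "S3InitiateRestoreObject": {
                "ExpirationInDays": expiration_days,
                "GlacierJobTier": tier,
            }
        },
        # Input 1: the inventory report used as the manifest.
        "Manifest": {
            "Spec": {"Format": "S3InventoryReport_CSV_20161130"},
            "Location": {"ObjectArn": manifest_arn, "ETag": manifest_etag},
        },
        # Completion report; here only failed tasks are written out.
        "Report": {
            "Bucket": report_bucket_arn,
            "Format": "Report_CSV_20180820",
            "Enabled": True,
            "Prefix": "batch-restore-reports",
            "ReportScope": "FailedTasksOnly",
        },
        "Priority": 10,
        "RoleArn": role_arn,
        # Idempotency token so a retried request does not create a second job.
        "ClientRequestToken": str(uuid.uuid4()),
    }
```

The resulting dictionary could be passed to `boto3.client("s3control").create_job(**request)`.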

AWS Step Functions

AWS Step Functions allows developers to design and execute complex workflows (visually) using SDK integrations with AWS services in a scalable and reliable manner. Its use cases range from microservice orchestration to data processing, powered by two workflow types:

- Standard workflows: with an execution time of up to 1 year, these allow developers to codify event-driven business logic that can wait for external stimuli, with inputs, outputs and transformations available at the task level.

- Express workflows: with an execution time of up to 5 minutes, these are ideal for high-volume data processing such as IoT or ETL workloads.

Step Functions has over 17 optimised AWS integrations and 200+ AWS SDK integrations, and combined with invocation sources such as API Gateway or AWS EventBridge, it has replaced AWS Lambda as the “glue” between services in many designs.
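To make the duration limits above concrete, the hypothetical helper below picks a workflow type from an expected execution time. Only the `STANDARD`/`EXPRESS` values are real: they match the `type` parameter of the Step Functions `CreateStateMachine` API. The helper name and decision rule are illustrative assumptions.

```python
from datetime import timedelta

# Maximum execution durations for each workflow type, as described above.
EXPRESS_MAX_DURATION = timedelta(minutes=5)
STANDARD_MAX_DURATION = timedelta(days=365)


def pick_workflow_type(expected: timedelta, waits_for_external_event: bool = False) -> str:
    """Hypothetical helper: return "EXPRESS" or "STANDARD" for a workload."""
    if expected > STANDARD_MAX_DURATION:
        raise ValueError("longer than a Standard workflow can run; split the work")
    if waits_for_external_event or expected > EXPRESS_MAX_DURATION:
        # Long-running or event-driven logic needs a Standard workflow.
        return "STANDARD"
    # Short, high-volume processing fits an Express workflow.
    return "EXPRESS"
```

In the restore solution described later, the per-object work is short-lived, which is why it can run as Express child workflows under a distributed map.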

AWS Glacier Restore Process

AWS S3 Glacier storage tiers allow customers to save significantly on storage charges for objects that require long-term archival or backup. Lifecycle rules can automatically transition objects to different storage tiers based on factors including object size, age, tag values and/or key prefix. Retrieving an object from a Glacier storage tier (which incurs retrieval costs that depend on the retrieval speed chosen) creates a temporary copy of the data, while the archived object itself stays in Glacier; once the restore period expires, the temporary copy is deleted.
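A restore is requested per object with the S3 `RestoreObject` API. The sketch below builds the arguments for boto3's `s3.restore_object` call; the helper name, default tier and validation rules are our own assumptions. `Days` controls how long the temporary copy is kept, and `Tier` selects the retrieval speed (and therefore the cost).

```python
# Retrieval options for restoring from Glacier Flexible Retrieval.
# (Glacier Deep Archive supports only "Standard" and "Bulk".)
RETRIEVAL_TIERS = ("Expedited", "Standard", "Bulk")


def build_restore_request(bucket: str, key: str, days: int, tier: str = "Standard") -> dict:
    """Hypothetical helper: arguments for boto3's s3.restore_object."""
    if tier not in RETRIEVAL_TIERS:
        raise ValueError(f"unknown retrieval tier: {tier!r}")
    if days < 1:
        raise ValueError("the temporary copy must be kept for at least one day")
    return {
        "Bucket": bucket,
        "Key": key,
        "RestoreRequest": {
            # How long the temporary copy stays available before S3 deletes it.
            "Days": days,
            # Retrieval speed; faster tiers cost more.
            "GlacierJobParameters": {"Tier": tier},
        },
    }
```

For example, `boto3.client("s3").restore_object(**build_restore_request("example-bucket", "backups/db.dump", days=7))` would request a 7-day temporary copy at the Standard tier.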

Scenario: Objects Prematurely Archived

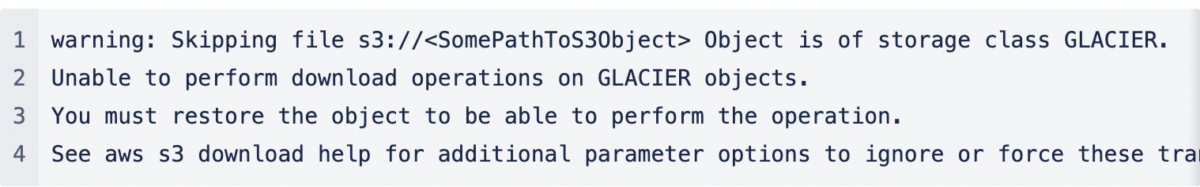

The scenario we’ll explore is one where objects were prematurely transitioned to the AWS Glacier storage tier, which caused the integrated application to encounter errors when accessing them.

This prevented the application from successfully performing backups, so resolving the issue quickly was a priority.

S3 Object lifecycle

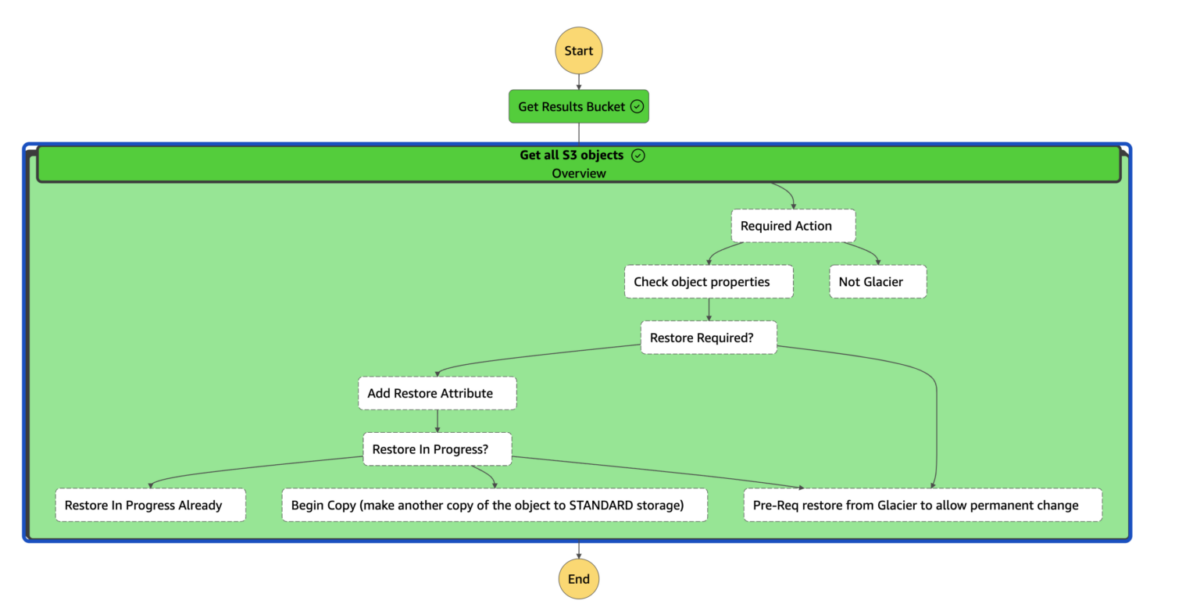

The Step Functions graph illustrates the business logic:

- “Required Action”: The first task uses the object metadata to determine whether the object is currently in GLACIER storage. If it is not, the iteration completes with no action required.

- “Check object properties”: This task uses `s3:HeadObject` to get the additional metadata required to determine whether a restore is already in progress for the object. Because the value of the `Restore` key is a comma-separated string, it is split in “Add Restore Attribute” so that decisions can be made on its individual values.

- “Restore in Progress”: Using the data from the `Restore` key, this Choice state either:

  - “Pre-Req restore from Glacier to allow permanent change”: initiates the temporary restore from Glacier so the object can be accessed.

  - “Restore in Progress Already”: ends the iteration if the temporary restore has already been initiated.

- “Begin Copy” (make another copy of the object in STANDARD storage): uses the `s3:CopyObject` API to make a long-term copy of the object from the temporarily restored copy.
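The decision logic in the graph can be sketched in plain Python, which is handy for unit-testing the Choice rules outside Step Functions. This is our interpretation, not the published state machine: the action names are hypothetical, and we assume a finished restore (`ongoing-request="false"`) is what routes an item to “Begin Copy”. The `Restore` value returned by `HeadObject` looks like `ongoing-request="false", expiry-date="Fri, 21 Dec 2012 00:00:00 GMT"`; because the expiry date itself contains a comma, the sketch matches `ongoing-request` with a regular expression rather than splitting on commas.

```python
import re
from typing import Optional

_ONGOING = re.compile(r'ongoing-request="(true|false)"')


def parse_restore_header(restore: Optional[str]) -> Optional[bool]:
    """True if a restore is in progress, False if finished, None if never requested."""
    if not restore:
        return None
    match = _ONGOING.search(restore)
    if match is None:
        raise ValueError(f"unexpected Restore value: {restore!r}")
    return match.group(1) == "true"


def next_action(storage_class: Optional[str], restore: Optional[str]) -> str:
    """Hypothetical action names mirroring the states in the graph."""
    # "Required Action": HeadObject omits StorageClass for STANDARD objects,
    # so anything other than GLACIER needs no work.
    if storage_class != "GLACIER":
        return "NO_ACTION"
    ongoing = parse_restore_header(restore)
    if ongoing is None:
        return "INITIATE_RESTORE"      # "Pre-Req restore from Glacier ..."
    if ongoing:
        return "RESTORE_IN_PROGRESS"   # "Restore in Progress Already"
    return "BEGIN_COPY"                # temporary copy is ready


def copy_to_standard_request(bucket: str, key: str) -> dict:
    """Arguments for boto3's s3.copy_object: copy in place into STANDARD."""
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "StorageClass": "STANDARD",
        "MetadataDirective": "COPY",
    }
```

Copying in place with `StorageClass` set to `STANDARD` replaces the archived object with a permanent one. Objects larger than 5 GB would need a multipart copy instead of a single `CopyObject` call.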

Engineered solution to restore objects from S3 Glacier

The AWS Step Functions workflow above has been published on Serverless Land for public use.

Why did we use AWS Step Functions in this instance over AWS S3 Batch Operations?

There were a few primary reasons why our team leveraged AWS Step Functions, including:

| Reason | AWS Step Functions | AWS S3 Batch Operations |
|---|---|---|
| Time to first action | As soon as the state machine is deployed, actions can be executed, and the team can keep iterating on the design. | As a precursor to any AWS S3 Batch Operations job, an inventory report is required as a manifest. Once set up, the report is delivered daily or weekly, and the first report can take up to 48 hours to arrive. |
| Service familiarity | The engineering team had deep experience with AWS Step Functions, with both Standard and Express workflows, including a thorough understanding of granting permissions based on the principle of least privilege. | While the engineering team was comfortable with AWS S3, they had no experience with the Batch Operations functionality specifically (although the documentation appears straightforward). Combined with the potentially long iteration time when developing a solution (driven by the inventory report delivery time), the team did not want to develop in uncharted waters while the impact on the application's RPO kept growing. |
| Data set size (> 150,000 objects) | No limit is defined on the number of items a distributed map can process (elaborated below); engineers need to be mindful of API quotas when interacting with other services. | AWS S3 Batch Operations supports petabyte-scale operations while managing retries, progress tracking and report generation. |

Design considerations

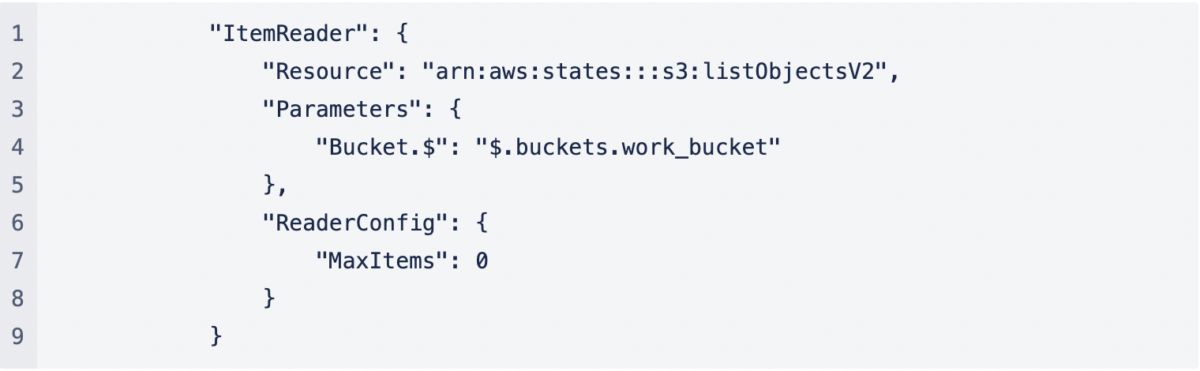

Consideration 1: List all objects in S3

With the release of the distributed map functionality, engineers are no longer constrained by input payload size limits or by the parallelism limit of the inline map (40). The ItemReader manages pagination over all objects in an S3 bucket and passes each item to the ItemProcessor.

The ItemProcessor is where the business logic is codified.
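A minimal distributed map skeleton, built as a Python dictionary and serialised to Amazon States Language JSON, might look like the following. It assumes the `arn:aws:states:::s3:listObjectsV2` item reader and the `aws-sdk:s3:headObject` SDK integration; the state names, bucket and `MaxConcurrency` value are placeholders, and only the first task of the per-object workflow is shown.

```python
import json


def distributed_map_definition(bucket: str, max_concurrency: int = 1000) -> dict:
    """Hypothetical skeleton: run an Express child workflow per object in a bucket."""
    return {
        "Comment": "Sketch: iterate over every object in a bucket",
        "StartAt": "ProcessAllObjects",
        "States": {
            "ProcessAllObjects": {
                "Type": "Map",
                # ItemReader pages through ListObjectsV2 for us; each item is one
                # object entry (Key, Size, StorageClass, ...).
                "ItemReader": {
                    "Resource": "arn:aws:states:::s3:listObjectsV2",
                    "Parameters": {"Bucket": bucket},
                },
                "MaxConcurrency": max_concurrency,
                # ItemProcessor holds the per-object business logic.
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "DISTRIBUTED", "ExecutionType": "EXPRESS"},
                    "StartAt": "CheckObjectProperties",
                    "States": {
                        "CheckObjectProperties": {
                            "Type": "Task",
                            "Resource": "arn:aws:states:::aws-sdk:s3:headObject",
                            "Parameters": {"Bucket": bucket, "Key.$": "$.Key"},
                            "End": True,
                        }
                    },
                },
                "End": True,
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(distributed_map_definition("example-bucket"), indent=2))
```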

Consideration 2: API limits

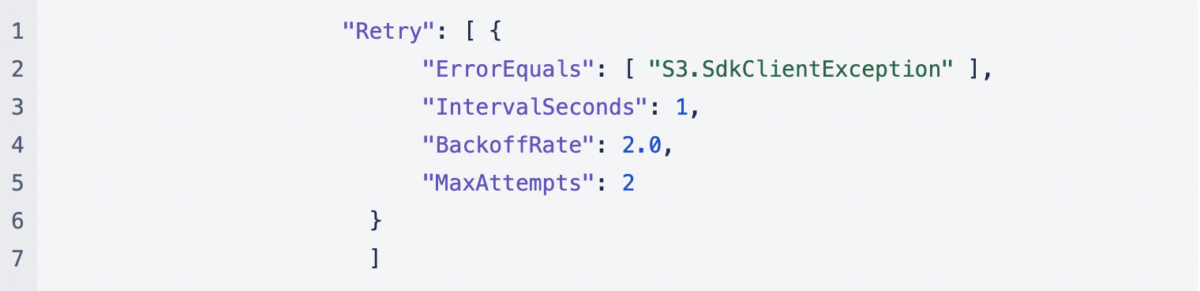

Every interaction with AWS services is subject to invocation limits that should be considered and monitored. AWS Step Functions SDK integrations emit metrics in the form of Service Integration Metrics. While AWS S3 Batch Operations manages concurrency and retry logic itself, AWS Step Functions requires explicit thought here; in this example, crude retry logic was added to the S3 operations:

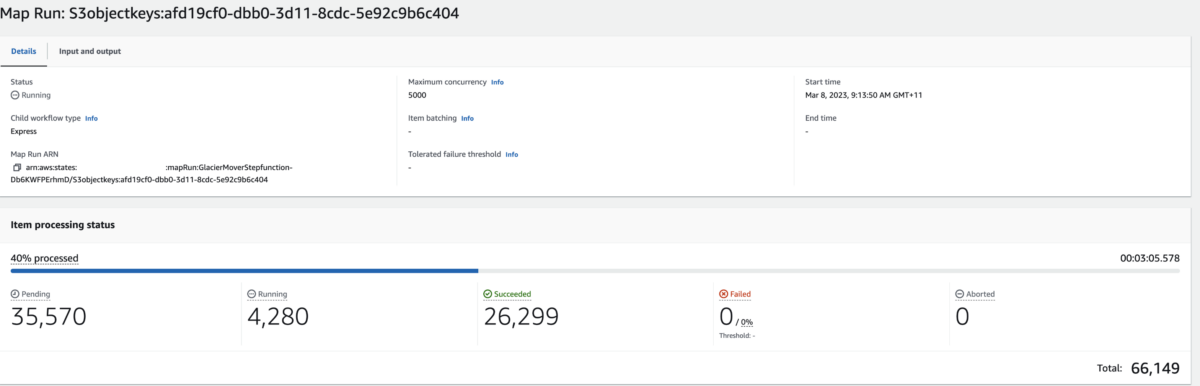
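Retries like these are configured with a `Retry` block on each S3 task. The sketch below shows one such block (the values are assumptions, not those used in the published workflow) and computes the nominal wait before each retry, which is `IntervalSeconds * BackoffRate ** (n - 1)` for the n-th retry, ignoring any jitter or maximum-delay settings.

```python
import json


def s3_retry_policy(interval_seconds: int = 2, max_attempts: int = 5, backoff_rate: float = 2.0) -> list:
    """A Retry block for an S3 SDK task (values are assumptions)."""
    return [
        {
            # States.ALL matches every error, which is what makes this "crude";
            # listing specific error names would target throttling more precisely.
            "ErrorEquals": ["States.ALL"],
            "IntervalSeconds": interval_seconds,
            "MaxAttempts": max_attempts,
            "BackoffRate": backoff_rate,
        }
    ]


def retry_delays(interval_seconds: int, max_attempts: int, backoff_rate: float) -> list:
    """Nominal wait in seconds before retry n: interval * rate ** (n - 1)."""
    return [interval_seconds * backoff_rate ** n for n in range(max_attempts)]


if __name__ == "__main__":
    print(json.dumps(s3_retry_policy(), indent=2))
    print(retry_delays(2, 5, 2.0))  # [2.0, 4.0, 8.0, 16.0, 32.0]
```

With these values a task that keeps failing waits about 62 seconds in total across its five retries before the error is surfaced, which spreads load away from a throttled S3 prefix.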

Observability of the iterations

With the release of the distributed map functionality, the service team introduced a new view that summarises the iterations under the ItemProcessor task. At a glance, it shows operators the configuration of the execution and the following iteration data:

- Pending items: based on queuing and configured batching, items that have yet to be selected for processing

- Running: items currently being processed by an Express workflow

- Succeeded: items that have been successfully executed through the workflow

- Failed: items that did not successfully exit the workflow. Failure thresholds can be configured as a count or a percentage of items, and both can also be set dynamically from a path in the input.

- Aborted: when the failure threshold has been breached, all remaining items are moved to this status.
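A simplified model of how the failure thresholds decide whether a Map Run fails is sketched below, together with an ASL field that sets one dynamically. It assumes the Map Run fails as soon as either configured threshold is exceeded and that, with neither set, any failure counts; treat it as an approximation of the service behaviour, not its implementation. The `$.toleratedFailurePercentage` input field is a hypothetical example.

```python
from typing import Optional

# ASL field on a distributed Map state; the *Path variant reads the value
# from the state input (the input field name here is hypothetical).
FAILURE_TOLERANCE_FIELDS = {
    "ToleratedFailurePercentagePath": "$.toleratedFailurePercentage",
}


def map_run_fails(
    total_items: int,
    failed_items: int,
    tolerated_count: Optional[int] = None,
    tolerated_percentage: Optional[float] = None,
) -> bool:
    """Simplified model: fail once either configured threshold is exceeded."""
    if tolerated_count is None and tolerated_percentage is None:
        # No tolerance configured: any failed item fails the run.
        return failed_items > 0
    if tolerated_count is not None and failed_items > tolerated_count:
        return True
    if tolerated_percentage is not None and total_items > 0:
        failed_pct = failed_items / total_items * 100
        if failed_pct > tolerated_percentage:
            return True
    return False
```

For example, with 1,000 objects and a 5% tolerance, 30 failures (3%) keep the run going, while 60 failures (6%) would fail it and move the remaining items to Aborted.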

When deciding between AWS Step Functions and S3 Batch Operations for the AWS Glacier restore process, consider the task’s complexity. Use AWS Step Functions for multi-step workflows with conditional branching and human intervention. Use S3 Batch Operations for simpler tasks, such as bulk operations on S3 objects or mass restores of Glacier objects. The choice depends on the specific requirements of the restore process. We’d love to hear if you’ve needed to develop a similar solution or if you have some feedback on the approach followed in this implementation.