A Gentle Introduction to AWS Network Firewall: Part 2

Steve Jones

Principal Solutions Architect

Part two of a three-part series on AWS Network Firewall’s capabilities, architecture and operational considerations

Introduction

Welcome back to our series on the AWS Network Firewall. In Part 1, we covered the components and architecture. In Part 2, we explore common implementation patterns for the AWS Network Firewall and key design decisions. We’ll cover scenarios and best practices to help you effectively deploy the AWS Network Firewall to meet different security and organisational requirements.

Establishing a perimeter

In an Enterprise Multi-Account AWS Architecture, establishing a perimeter involves creating layers of protection around your cloud infrastructure to safeguard network resources, control traffic, and enforce security boundaries.

This perimeter is part of a defence-in-depth approach to defending against unauthorised access, preventing malicious traffic, and ensuring the secure operation of your workloads.

AWS Network Firewall is a key component of this perimeter, along with Virtual Private Clouds (VPCs), security groups, and network access control lists (NACLs).

Perimeter protection uses network isolation strategies, such as private subnets, AWS Transit Gateway, and AWS PrivateLink, to segment your network and control traffic between its parts, whether that traffic originates from internal or external sources.

This approach directly aligns with the Security Pillar of the AWS Well-Architected Framework, which emphasises protecting network resources and implementing network isolation.

Centralised or Decentralised

The deployment model for ingress and egress internet traffic is a critical design decision. Egress provides workloads with connectivity to the Internet, whereas ingress determines how you present endpoints and services to external users.

Choosing between a centralised or decentralised approach has significant operational, security, and cost implications. Both strategies have distinct advantages, but the decision hinges on control, complexity, governance and cost.

The two don't have to align; you can have centralised egress with decentralised ingress, the reverse, or a blend of both. In practice, however, the choice generally follows the organisation's operating model for supporting and managing AWS workloads.

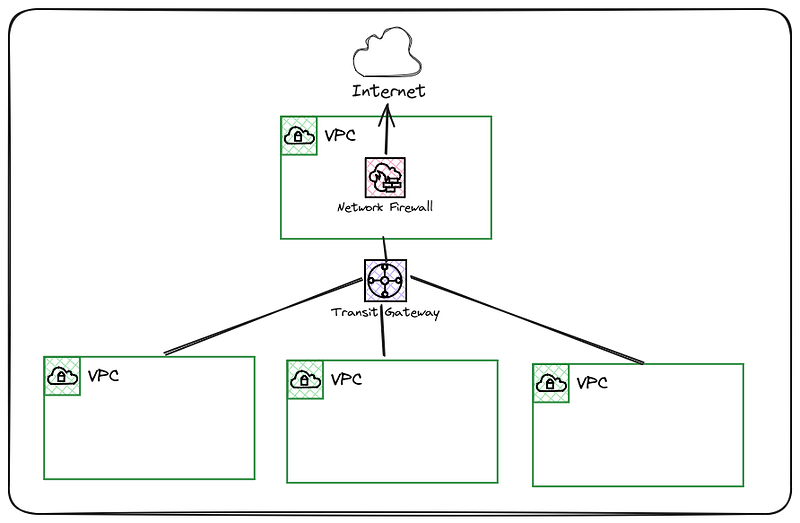

Centralised Egress

In a centralised design, all traffic destined for the Internet will traverse a single firewall exit point. This is achieved through routing configuration within VPC and Transit Gateway route tables.

This model is often favoured initially because it mirrors the traditional on-premises approach to internet access, and operational teams are usually already set up to support it.

Some of the benefits include:

- Simplified Management—A centralised model allows you to manage the egress point and firewall policies from a single location, reducing the overhead of maintaining multiple firewall instances. A central network or platform team often performs this function.

- Enhanced Security — Traffic flows through an agreed exit point, making it easier to enforce consistent security policies and audit outbound traffic.

- Cost Efficiency — A single Network Firewall with endpoints across multiple Availability Zones for availability can serve multiple accounts, potentially reducing costs for infrastructure and administration.

- Visibility & Control — One place to monitor and control all outbound traffic, improving observability and enabling centralised alerting.

Some of the negatives include:

- Single Point of Failure — Centralisation can introduce risk if it is not architected for resilience, so a central network firewall should have endpoints in multiple Availability Zones. Even then, a faulty configuration update can have a wide impact if it is not tested and validated before deployment.

- Increased Latency — Requests experience some additional latency as they traverse the Transit Gateway and the Network Firewall endpoints; however, this is generally no more than a few milliseconds.
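The routing chain behind centralised egress can be modelled in a few lines. This is a simplified model, not an AWS API: the route-table names, CIDRs and next-hop labels such as `tgw` and `fw-endpoint` are hypothetical, and each hop is resolved with longest-prefix match, as VPC and Transit Gateway route tables do. It traces an internet-bound packet from a workload VPC, through Transit Gateway and the inspection VPC's firewall endpoint, to the NAT and internet gateways.

```python
import ipaddress

# Hypothetical route tables for a centralised egress design. Keys are
# destination CIDRs; values are next-hop labels (illustrative, not real IDs).
ROUTE_TABLES = {
    # Workload (spoke) VPC private subnet: non-local traffic goes to Transit Gateway.
    "spoke-private": {"10.1.0.0/16": "local", "0.0.0.0/0": "tgw"},
    # Transit Gateway route table associated with the spoke VPC attachments.
    "tgw-spoke-rt": {"0.0.0.0/0": "inspection-vpc-attachment"},
    # Inspection VPC subnet holding the TGW attachment: send to the firewall endpoint.
    "inspection-tgw-subnet": {"10.100.0.0/16": "local", "0.0.0.0/0": "fw-endpoint"},
    # Firewall subnet: inspected traffic continues to the NAT gateway.
    "inspection-firewall-subnet": {"10.100.0.0/16": "local", "0.0.0.0/0": "nat-gateway"},
    # Public subnet holding the NAT gateway: out via the internet gateway.
    "inspection-public-subnet": {"10.100.0.0/16": "local", "0.0.0.0/0": "igw"},
}

# Route table consulted once traffic reaches each next hop (a simplification).
NEXT_TABLE = {
    "tgw": "tgw-spoke-rt",
    "inspection-vpc-attachment": "inspection-tgw-subnet",
    "fw-endpoint": "inspection-firewall-subnet",
    "nat-gateway": "inspection-public-subnet",
}


def lookup(table: dict[str, str], dest: str) -> str:
    """Return the next hop for dest using longest-prefix match."""
    addr = ipaddress.ip_address(dest)
    matches = [
        (ipaddress.ip_network(cidr), hop)
        for cidr, hop in table.items()
        if addr in ipaddress.ip_network(cidr)
    ]
    if not matches:
        raise LookupError(f"no route to {dest}")
    return max(matches, key=lambda m: m[0].prefixlen)[1]


def trace(dest: str, start: str = "spoke-private") -> list[str]:
    """Follow next hops until traffic stays local or leaves via the internet gateway."""
    hops, table = [], start
    while True:
        hop = lookup(ROUTE_TABLES[table], dest)
        hops.append(hop)
        if hop in ("local", "igw"):
            return hops
        table = NEXT_TABLE[hop]


print(trace("203.0.113.10"))
# ['tgw', 'inspection-vpc-attachment', 'fw-endpoint', 'nat-gateway', 'igw']
```

The model covers only the outbound direction. A working design also needs return routes (for example, the NAT gateway's subnet routing spoke CIDRs back through the firewall endpoint) and Transit Gateway appliance mode on the inspection VPC attachment, so both directions of a flow reach the same firewall endpoint.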

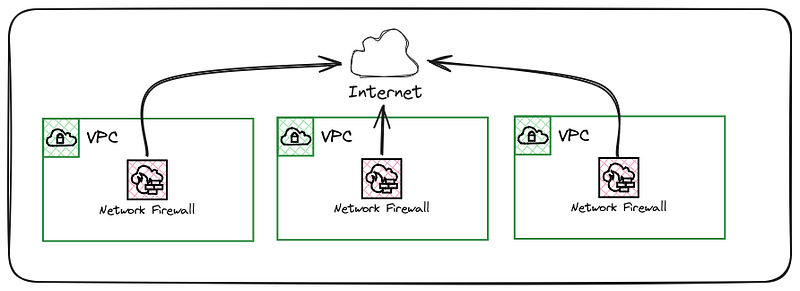

Decentralised Egress

In a decentralised design, traffic destined for the Internet has multiple exit points. This is common in organisations where product teams own all the supporting resources for their workloads.

The product teams are responsible for every aspect of the Network Firewall, including provisioning, rule management and monitoring.

Some of the benefits include:

- Improved Performance — Localised egress points reduce the need to route traffic across Transit Gateway, lowering latency and improving performance.

- Reduced Dependency on Central Systems — Each VPC manages its own firewall policies, reducing the risk of a central system failure affecting multiple services.

- Scalability — Decentralising egress points allows for easier horizontal scaling, as traffic isn’t funnelled through a single point.

Some of the negatives include:

- Complexity — Managing firewall rules and egress points across multiple accounts or VPCs increases the operational burden for supporting teams, and ensuring consistent security policies across all locations becomes more challenging. AWS Firewall Manager is designed to address this by centrally deploying and managing Network Firewall policies across accounts.

- Higher Costs — Decentralised egress requires more Network Firewall endpoints, which increases costs. AWS Network Firewall charges an hourly fee for each endpoint plus a charge for each gigabyte of traffic it processes.

- Fragmented Visibility—Decentralising may mean losing the unified visibility and control centralisation provides, requiring more effort to ensure compliance and monitoring.

Making the decision

Determining the suitable egress model is highly organisation-specific and depends on various factors. A decentralised model can offer better performance and scalability if your applications need performant access to internet endpoints. On the other hand, if security and compliance are critical, centralised egress provides consistent firewall policies and simplifies auditing and governance.

Cost is also a consideration: centralised egress typically reduces infrastructure costs for smaller applications, while decentralised egress requires more resources and increases complexity. Lastly, take your team's operational capacity into account. If teams need the autonomy to control the end-to-end traffic flow and can handle a more complex infrastructure, decentralised egress might offer the flexibility and performance benefits required.
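To make the cost trade-off concrete, here is a rough monthly comparison. It is a sketch under stated assumptions: the unit rates are placeholders rather than current AWS prices, NAT gateway charges are ignored, and the centralised model is assumed to add a Transit Gateway per-GB processing charge that the decentralised model avoids for egress.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation for monthly pricing


@dataclass
class Rates:
    """Placeholder unit prices; substitute current figures from AWS pricing pages."""
    endpoint_per_hour: float  # Network Firewall endpoint, per endpoint-hour
    firewall_per_gb: float    # Network Firewall data processing, per GB
    tgw_per_gb: float         # Transit Gateway data processing, per GB


def centralised_monthly(r: Rates, azs: int, total_gb: float) -> float:
    # One shared firewall with an endpoint per AZ; all egress also crosses Transit Gateway.
    endpoint_cost = azs * r.endpoint_per_hour * HOURS_PER_MONTH
    return endpoint_cost + total_gb * (r.firewall_per_gb + r.tgw_per_gb)


def decentralised_monthly(r: Rates, azs: int, vpcs: int, total_gb: float) -> float:
    # One firewall per VPC, each with an endpoint per AZ; egress skips Transit Gateway.
    endpoint_cost = vpcs * azs * r.endpoint_per_hour * HOURS_PER_MONTH
    return endpoint_cost + total_gb * r.firewall_per_gb


rates = Rates(endpoint_per_hour=1.0, firewall_per_gb=0.1, tgw_per_gb=0.05)  # illustrative only
print(centralised_monthly(rates, azs=3, total_gb=1000))
print(decentralised_monthly(rates, azs=3, vpcs=10, total_gb=1000))
```

In this model the decentralised endpoint cost scales with the number of VPCs multiplied by the number of Availability Zones, whereas the centralised model keeps the endpoint count fixed and instead pays an extra per-GB Transit Gateway charge that scales with traffic volume.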

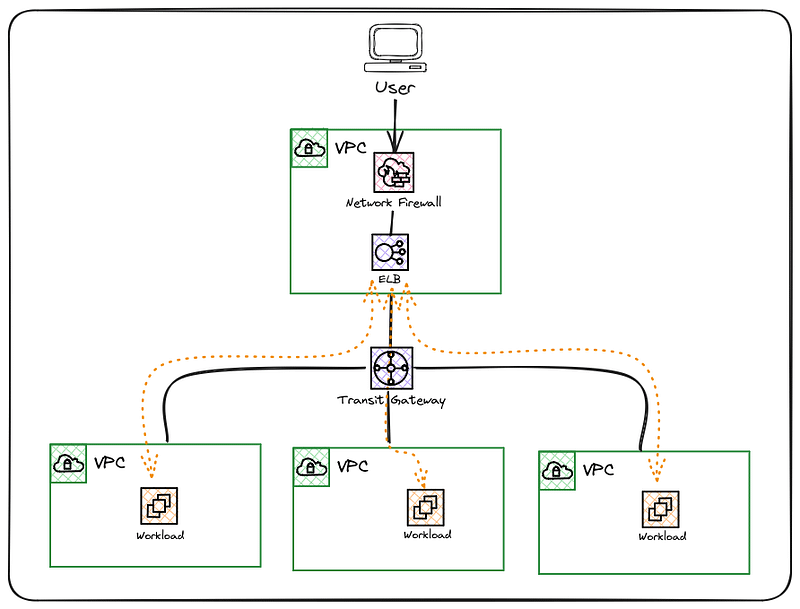

Centralised Ingress

Centralising ingress establishes a single location to present all public endpoints. This is often required for compliance reasons within an organisation; however, it has implications for how workloads can be accessed externally.

As the above diagram shows, additional resources such as Elastic Load Balancing are required to present the workload services via the ingress Network Firewall. These resources typically live in a dedicated account, often referred to as a Network Edge or Security Perimeter account, which ensures only authorised services can be exposed externally.

Some of the benefits include:

- Unified Security Policies — Enforce consistent security policies across all applications and services accessed externally. This is ideal for environments requiring strict governance and compliance.

- Simplified Management—A centralised ingress makes managing rules and firewall policies easier. You can configure and monitor traffic at one point, reducing administrative overhead.

- Cost Efficiency—Running a single ingress point can be more cost-effective, as you avoid duplicating firewall instances and associated resources across multiple accounts. However, additional components such as Elastic Load Balancing will be required for cross-account communication.

- Enhanced Visibility — All traffic flows through a single location, simplifying monitoring, logging, and auditing.

Some of the negatives include:

- Single Point of Failure—Centralising ingress creates the risk of a single point of failure. If the centralised ingress point becomes unavailable, all inbound traffic is affected. Architecting for high availability is essential.

- Complexity — Multiple resources are now required to reach the workload, and each must be configured and monitored appropriately. For example, load balancers in the central account must register workloads using IP address targets, because the targets reside in a different VPC.

- Reduced Agility — Workload teams now depend on additional configuration that is usually outside their control, because organisational policy typically places the Network Edge account under the platform or central network team.

- Increased Latency — Requests experience some additional latency as they traverse the Transit Gateway and the Network Firewall endpoints; however, this is typically in the low milliseconds.
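The IP-target requirement for centralised ingress can be illustrated with a small helper that builds the `Targets` list for Elastic Load Balancing's `RegisterTargets` API. The function name and addresses are hypothetical; the assumption encoded here is that targets outside the load balancer's VPC are registered with `AvailabilityZone="all"`, which is how ELB expects IP targets reached through Transit Gateway to be registered.

```python
import ipaddress


def build_ip_targets(workload_ips: list[str], port: int, lb_vpc_cidr: str) -> list[dict]:
    """Build the Targets list for an Elastic Load Balancing register_targets call.

    IP targets outside the load balancer's VPC (e.g. workload IPs reached over
    Transit Gateway) are registered with AvailabilityZone="all".
    """
    lb_vpc = ipaddress.ip_network(lb_vpc_cidr)
    targets = []
    for ip in workload_ips:
        target = {"Id": ip, "Port": port}
        if ipaddress.ip_address(ip) not in lb_vpc:
            target["AvailabilityZone"] = "all"
        targets.append(target)
    return targets


# Hypothetical addresses: workloads in a spoke VPC, load balancer in the Network Edge VPC.
targets = build_ip_targets(["10.1.1.10", "10.1.2.10"], 443, lb_vpc_cidr="10.100.0.0/16")
print(targets)
```

With boto3, the list would be passed as `boto3.client("elbv2").register_targets(TargetGroupArn=tg_arn, Targets=targets)`, where `tg_arn` (a placeholder) identifies a target group created with `TargetType="ip"`.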

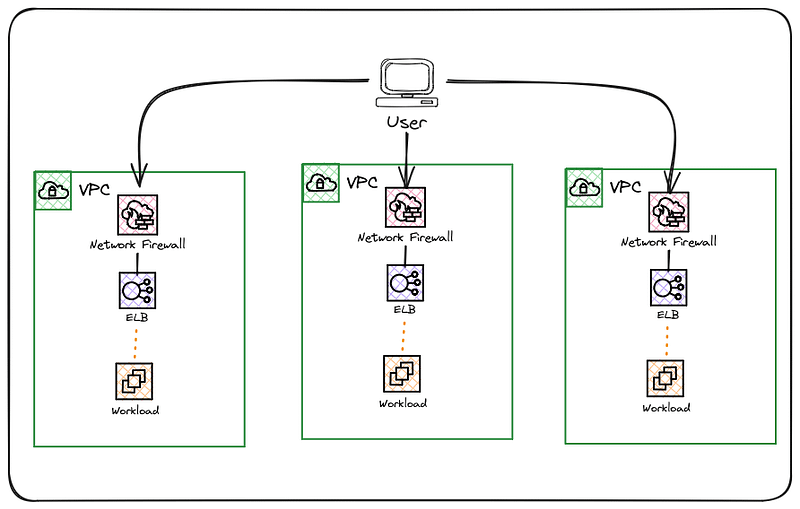

Decentralised Ingress

Decentralising ingress allows workload teams to operate autonomously, enabling them to have end-to-end control over how they present services externally.

The complexities of cross-account service access are removed; however, the responsibilities of workload owners increase.

Some of the benefits include:

- Improved Performance—Allowing traffic to enter through multiple ingress points reduces latency and avoids the bottlenecks associated with centralisation.

- Reduced Blast Radius — If one ingress point is compromised, it’s isolated to a single segment of your infrastructure, reducing the overall impact on the rest of the network.

Some of the negatives include:

- Operational Complexity — Managing multiple ingress points increases complexity. Each ingress point may require its own firewall rules, policies, and monitoring, and keeping security consistent across these points can be time-consuming. AWS Firewall Manager is designed to address this.

- Higher Costs—Decentralised ingress typically involves setting up multiple instances of AWS Network Firewall and related infrastructure, which increases overall costs for deployment and maintenance.

- Fragmented Visibility — With multiple ingress points, it becomes harder to maintain centralised visibility and control. Monitoring and auditing traffic across several entry points can create blind spots if improperly handled.

Making the decision

As with egress, determining the suitable model is highly organisation-specific and depends on various factors. If security and compliance requirements dictate a single entry point, centralised ingress provides this capability well. However, be mindful of the operational and architectural implications for workload teams whose services require external access.

East-West & On-Premises Traffic Inspection

While external network perimeter security is essential, many modern attacks originate within the network. East-west traffic inspection focuses on monitoring and controlling internal traffic flows between different resources and environments within AWS.

This is also a consideration for organisations with established connectivity to on-premises networks through AWS Direct Connect, which need to control and monitor traffic flowing between those networks and AWS.

AWS Network Firewall can be provisioned to meet both these requirements using stateless and stateful rule groups that target specific CIDR ranges and ports, combined with routing configuration within Transit Gateway. These rules supplement security groups and network access control lists (NACLs) as part of a defence-in-depth approach.
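As a rough illustration of how stateless rules act on CIDR ranges and ports, the sketch below evaluates rules in priority order (lowest number first) and returns the first matching action, falling back to a policy default. It is a simplified model, not the service's engine: real stateless rules can also match protocols, source ports and TCP flags, and the CIDRs are hypothetical. The action names `aws:pass`, `aws:drop` and `aws:forward_to_sfe` are the standard stateless actions.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class StatelessRule:
    """Simplified stateless rule: matches source/destination CIDRs and destination ports only."""
    priority: int  # lower numbers are evaluated first
    action: str    # "aws:pass", "aws:drop" or "aws:forward_to_sfe"
    sources: list[str]
    destinations: list[str]
    dest_ports: list[tuple[int, int]] = field(default_factory=lambda: [(0, 65535)])

    def matches(self, src: str, dst: str, port: int) -> bool:
        s, d = ipaddress.ip_address(src), ipaddress.ip_address(dst)
        return (
            any(s in ipaddress.ip_network(c) for c in self.sources)
            and any(d in ipaddress.ip_network(c) for c in self.destinations)
            and any(lo <= port <= hi for lo, hi in self.dest_ports)
        )


def evaluate(rules: list[StatelessRule], src: str, dst: str, port: int,
             default: str = "aws:forward_to_sfe") -> str:
    """First match in priority order wins; unmatched packets get the policy default."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(src, dst, port):
            return rule.action
    return default


# Hypothetical CIDRs: on-premises 192.168.0.0/16, Prod 10.0.0.0/16, all AWS 10.0.0.0/8.
RULES = [
    StatelessRule(10, "aws:forward_to_sfe", ["192.168.0.0/16"], ["10.0.0.0/16"], [(443, 443)]),
    StatelessRule(20, "aws:drop", ["192.168.0.0/16"], ["10.0.0.0/8"]),
]

print(evaluate(RULES, "192.168.1.5", "10.0.3.4", 443))  # aws:forward_to_sfe
print(evaluate(RULES, "192.168.1.5", "10.1.3.4", 22))   # aws:drop
```

Because stateless rules assess each packet in isolation, return traffic needs its own matching rule; forwarding traffic to the stateful engine, as the first rule does, avoids that by tracking the flow.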

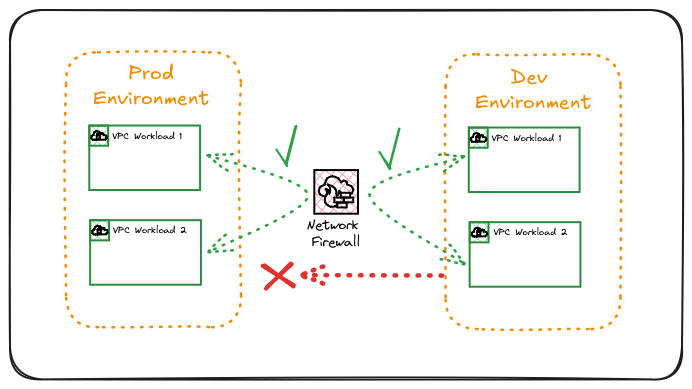

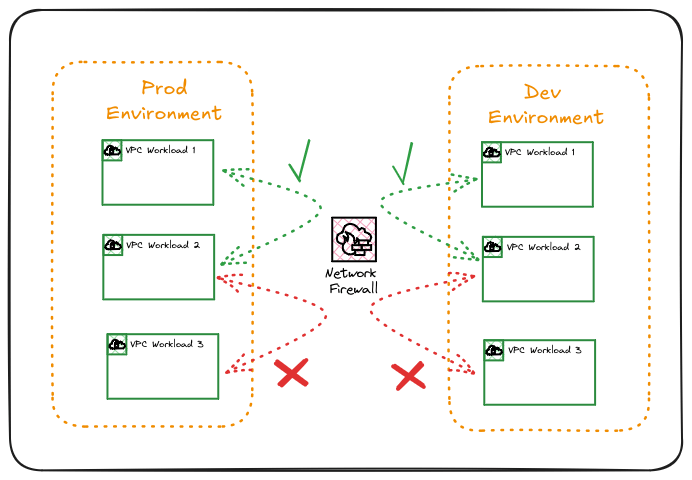

Inter-VPC Traffic Filtering

Inter-VPC traffic filtering involves inspecting traffic between VPCs to ensure that all communication complies with security policies. If required, this can be used to segregate environments.

In the above representation, Production workloads can communicate with each other where the necessary security groups allow it, as can workloads within the Dev environment. However, the Dev environment cannot communicate with the Production environment, as it may have less stringent patching and security monitoring.

This is implemented through rules within the Network Firewall policy; however, it could also be achieved with Transit Gateway route tables, ensuring no routing path exists between the Production and Dev environments.

Using AWS Network Firewall for this configuration, however, enables more complex rules: not just CIDR-to-CIDR controls, but rules based on ports or specific traffic flows across many VPCs or private subnets, depending on the requirements.
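Network Firewall stateful rule groups accept Suricata-compatible rule strings, so the Dev-to-Prod segregation and a port-specific flow could be expressed as below. The `suricata_rule` helper, CIDRs and SIDs are hypothetical; treat this as a sketch of the rule shape rather than a complete policy.

```python
def suricata_rule(action: str, proto: str, src: str, dst: str,
                  dst_port: str, msg: str, sid: int) -> str:
    """Format one Suricata-compatible rule string (hypothetical helper)."""
    return (f"{action} {proto} {src} any -> {dst} {dst_port} "
            f'(msg:"{msg}"; sid:{sid}; rev:1;)')


# Hypothetical CIDRs for illustration only.
PROD, DEV = "10.0.0.0/16", "10.1.0.0/16"
PROD_APP, PROD_DB = "10.0.1.0/24", "10.0.2.0/24"

RULES = [
    # Port-specific flow: explicitly allow the Prod app tier to reach the Prod database on TCP 5432.
    suricata_rule("pass", "tcp", PROD_APP, PROD_DB, "5432", "Prod app to Prod DB", 1000001),
    # Segregation: drop any traffic sourced from Dev and destined for Prod.
    suricata_rule("drop", "ip", DEV, PROD, "any", "Block Dev to Prod", 1000002),
]

print("\n".join(RULES))
```

The joined string could then be supplied as the `RulesString` of a stateful rule group's `RulesSource`.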

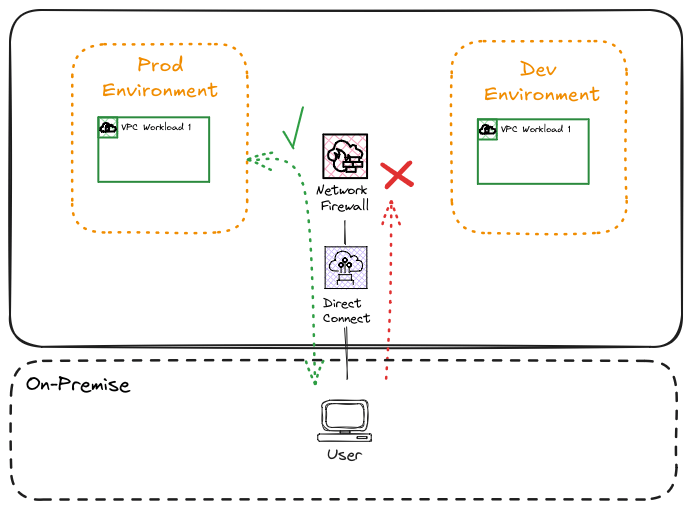

On-premises Traffic Filtering

A hybrid AWS network establishes connectivity to on-premises networks through AWS Site-to-Site VPN or, for dedicated bandwidth, AWS Direct Connect. Users can then access workloads hosted in AWS over this link; however, that access still needs to be controlled and limited.

In the above representation, on-premises users can access the necessary endpoints in the production environment, while AWS Network Firewall restricts open access to the development environment. This could be refined further to allow only the subset of users who need to test newer versions of a workload in the development environment.
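Restricting on-premises users while letting a tester subset into the development environment relies on how stateful rules are prioritised. The sketch assumes Network Firewall's default action order, in which pass rules take precedence over drop and then reject rules regardless of where they appear in the rule group, and that traffic matching no rule is passed. CIDRs and names are hypothetical, and the model ignores ports and protocols.

```python
import ipaddress
from dataclasses import dataclass

# Simplified model of the default stateful action order: if any pass rule matches,
# the flow passes; otherwise drop, then reject. Alert rules only log, so they are omitted.
VERDICT_ORDER = ["pass", "drop", "reject"]


@dataclass
class Rule:
    action: str  # "pass", "drop" or "reject"
    src: str     # source CIDR
    dst: str     # destination CIDR

    def matches(self, src_ip: str, dst_ip: str) -> bool:
        return (ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.src)
                and ipaddress.ip_address(dst_ip) in ipaddress.ip_network(self.dst))


def verdict(rules: list[Rule], src_ip: str, dst_ip: str) -> str:
    matched = {r.action for r in rules if r.matches(src_ip, dst_ip)}
    for action in VERDICT_ORDER:
        if action in matched:
            return action
    return "pass"  # in this model, traffic matching no stateful rule is allowed


# Hypothetical CIDRs: on-premises range, a tester subnet within it, and Dev.
ON_PREM, TESTERS, DEV = "172.16.0.0/12", "172.16.50.0/24", "10.1.0.0/16"
RULES = [
    Rule("drop", ON_PREM, DEV),  # broad block: on-premises -> Dev
    Rule("pass", TESTERS, DEV),  # narrow exception for testers
]

print(verdict(RULES, "172.16.50.7", "10.1.0.20"))  # pass (tester)
print(verdict(RULES, "172.16.9.7", "10.1.0.20"))   # drop (other on-premises user)
```

Under strict rule ordering the outcome would instead depend on each rule's position, so the tester `pass` rule would need to sit above the broader `drop` rule.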

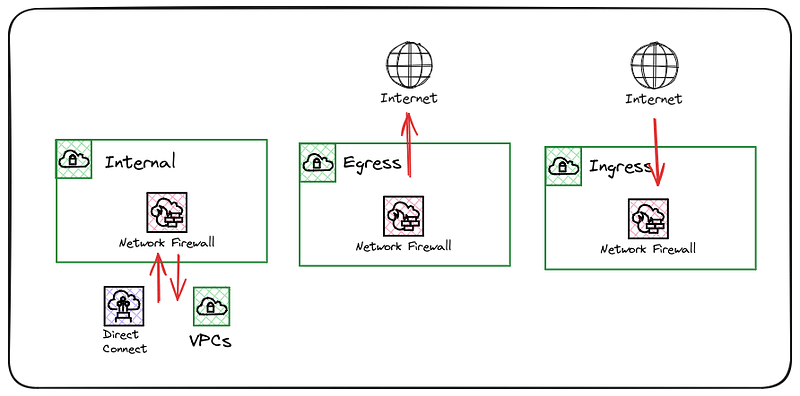

Firewall Functional Roles

While a single AWS Network Firewall can be configured to support all the above scenarios and deployment models, when it comes to firewall management, I’m a firm believer in the Unix philosophy of “do one thing and do it well” for maintaining clarity, simplicity, and security.

Ideally, each firewall should be configured to effectively perform a specific role, ensuring that all rules align with the firewall’s primary objective. This targeted approach reduces complexity and minimises the risk of misconfiguration.

Under the centralised model, an organisation might deploy the following firewalls and roles:

- Ingress Firewall — Allow external access to authorised endpoints that should be exposed to the Internet.

- Egress Firewall — Allow internal hosts access to authorised internet endpoints.

- Internal Firewall — Allow access between authorised networks and environments, both within AWS and across connected networks such as on-premises.

With the above in place, routing and rule group configuration are simplified and easily understood, which helps both day-to-day operations and troubleshooting when developing rules. This does, however, come at a cost because of the way AWS Network Firewall is charged. In a Region with three Availability Zones, the above configuration would require nine firewall endpoints (three firewalls across three Availability Zones), each charged by the hour before any data processing fees.
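The endpoint figures follow from a simple product: one endpoint per firewall per Availability Zone. This sketch assumes a Region with three Availability Zones and the common 730-hour monthly approximation, and compares the three-role design with a consolidated two-firewall design in which egress and internal roles share one firewall.

```python
HOURS_PER_MONTH = 730  # common approximation


def endpoint_count(roles: list[str], azs: int) -> int:
    # One firewall per functional role, each with an endpoint in every Availability Zone.
    return len(roles) * azs


SEPARATE = ["ingress", "egress", "internal"]
CONSOLIDATED = ["ingress", "egress+internal"]

for name, roles in [("separate", SEPARATE), ("consolidated", CONSOLIDATED)]:
    n = endpoint_count(roles, azs=3)
    print(f"{name}: {n} endpoints, {n * HOURS_PER_MONTH} endpoint-hours per month")
# separate: 9 endpoints, 6570 endpoint-hours per month
# consolidated: 6 endpoints, 4380 endpoint-hours per month
```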

Some organisations consolidate the Egress and Internal Firewalls to reduce this overhead, resulting in six firewall endpoints. Rule groups are then used to manage rules that serve a unified purpose. However, it's essential to be aware that each rule group has a fixed capacity, set when it is created, which limits the number of rules it can contain.

Careful planning is needed to ensure your rule groups are scalable and stay within these limits. You can maintain an efficient, secure, and scalable firewall architecture by aligning all rules to a single purpose and managing rule groups within their constraints.
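When planning capacity, it helps to estimate how much a stateless rule group will consume. This sketch assumes the calculation AWS documents for stateless rules, where a rule's capacity is the product of the number of values in each match setting (an empty setting counts as 1) and a group's capacity is the sum over its rules. It ignores TCP flag settings, and the rules and reserved capacity are hypothetical. Because capacity is fixed when a rule group is created, an estimate like this is worth doing beforehand.

```python
from math import prod

# Match settings considered by this sketch (TCP flag settings are ignored).
SETTINGS = ["protocols", "sources", "destinations", "source_ports", "destination_ports"]


def rule_capacity(rule: dict[str, list]) -> int:
    """Estimate one stateless rule's capacity: the product of the number of values
    in each match setting, counting an empty or missing setting as 1."""
    return prod(max(1, len(rule.get(s, []))) for s in SETTINGS)


def group_capacity(rules: list[dict[str, list]]) -> int:
    return sum(rule_capacity(r) for r in rules)


# Hypothetical rules for illustration.
rules = [
    {"protocols": [6, 17], "sources": ["10.0.0.0/16", "10.1.0.0/16", "10.2.0.0/16"],
     "destinations": ["192.168.0.0/16"], "destination_ports": [(80, 80), (443, 443)]},
    {"sources": ["10.3.0.0/16"]},
]

needed = group_capacity(rules)
reserved = 100  # hypothetical capacity chosen when the rule group is created
print(needed, needed <= reserved)  # 13 True
```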

Conclusion

By understanding the typical implementation patterns of AWS Network Firewall, you can design a flexible and robust network security architecture that aligns with your organisation’s unique needs. While AWS Network Firewall offers a variety of deployment options, careful planning is crucial to ensure that your architecture not only meets security and compliance requirements but also integrates seamlessly with your organisation’s operational model.

This includes considering how your teams will collaborate within the Multi-Account AWS environment and ensuring clear roles and responsibilities across security, network operations, and compliance teams. Balancing security controls with operational agility is critical, as a well-architected firewall solution should empower teams to work efficiently without compromising security standards.

The thoughtful design of your AWS Network Firewall deployment will provide scalable, adaptable protection while supporting your organisation’s broader cloud strategy.